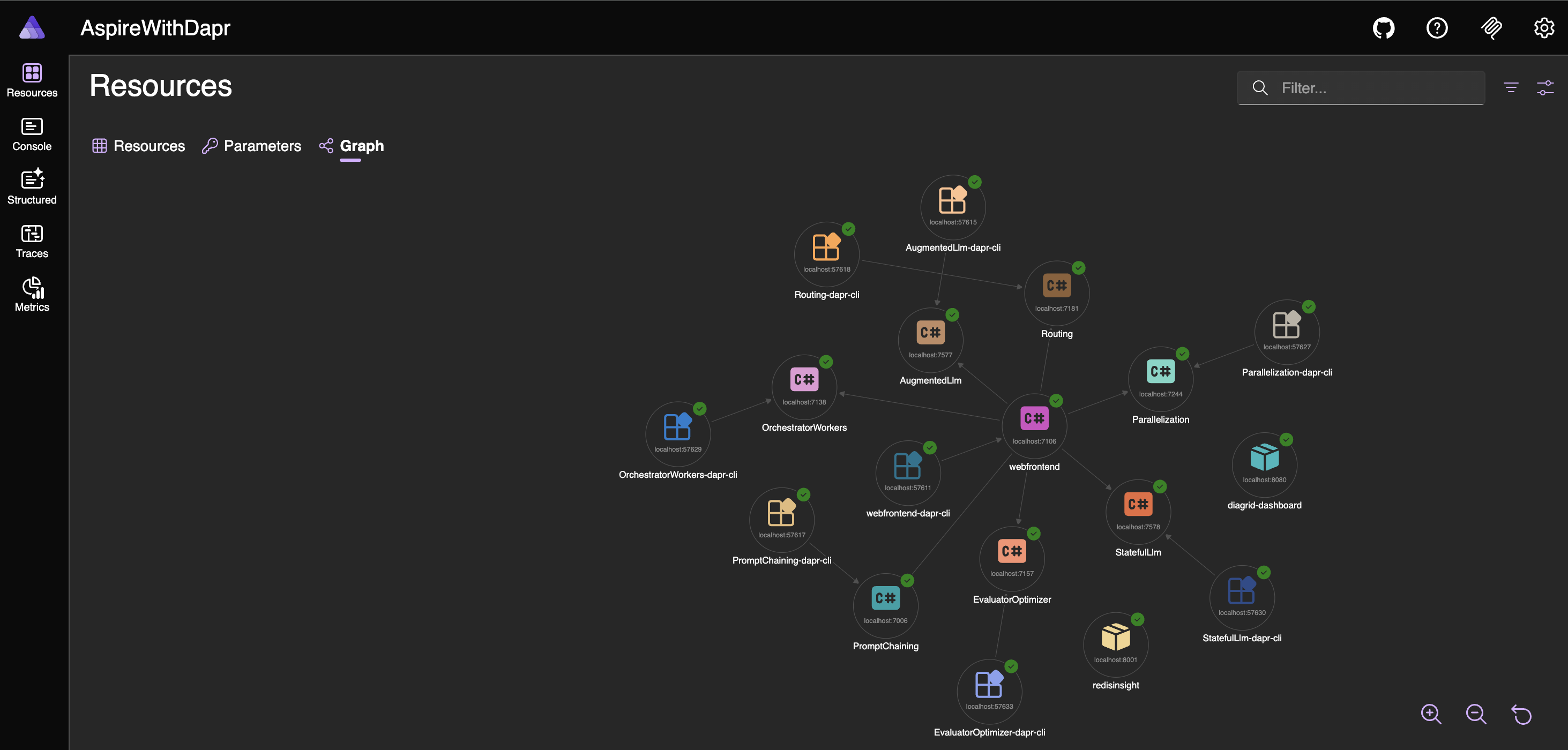

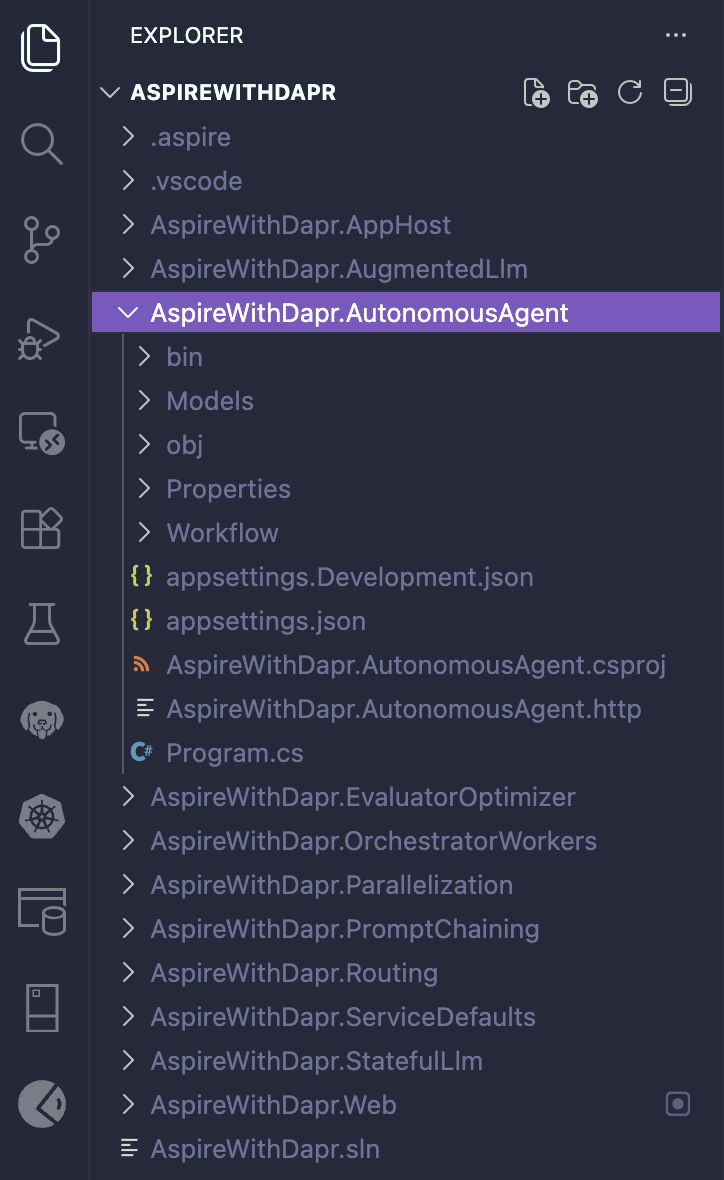

This post continues my previous post, Dapr - Workflow & .NET Aspire, which introduced the Prompt Chaining, Routing, and Parallelization patterns. Here the focus shifts to more advanced agentic patterns (Orchestrator-Workers, Evaluator-Optimizer, and Autonomous Agent) and shows how to implement them with Dapr Workflow and the Dapr Conversation API to build scalable, stateful, and modular LLM pipelines in distributed .NET applications.

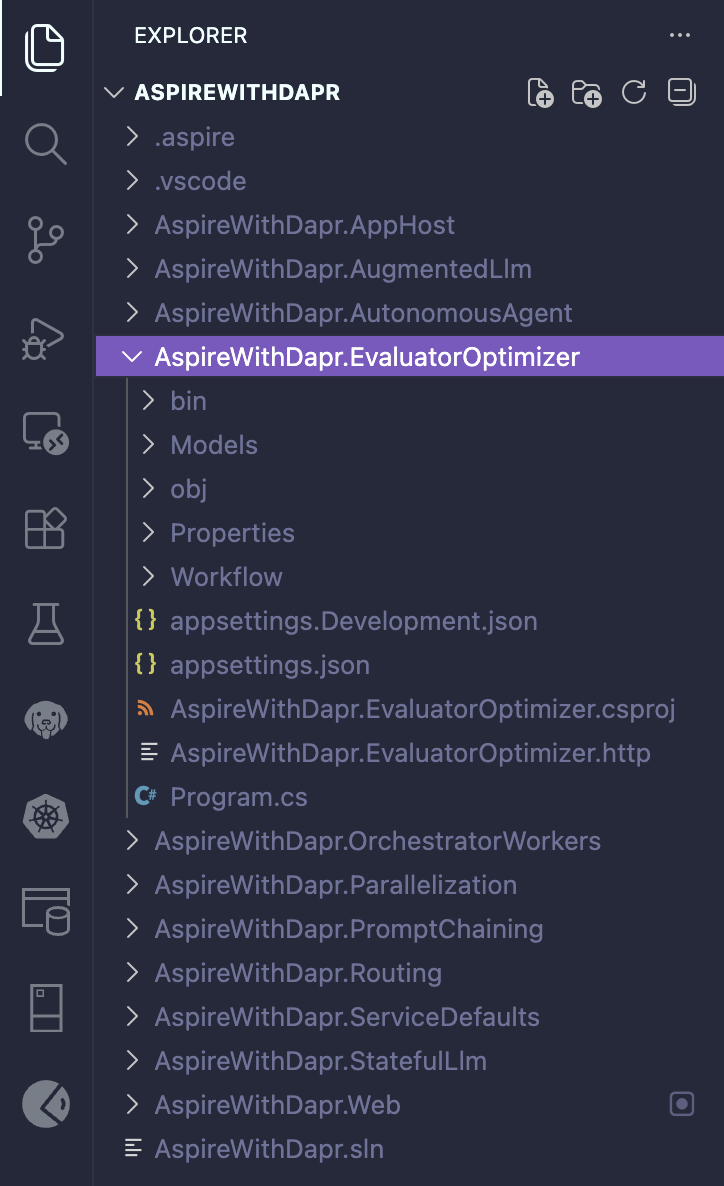

Prerequisites & Solution Structure

- Refer to the earlier blog post, Dapr - Workflow & .NET Aspire, for the prerequisites and the base solution structure.

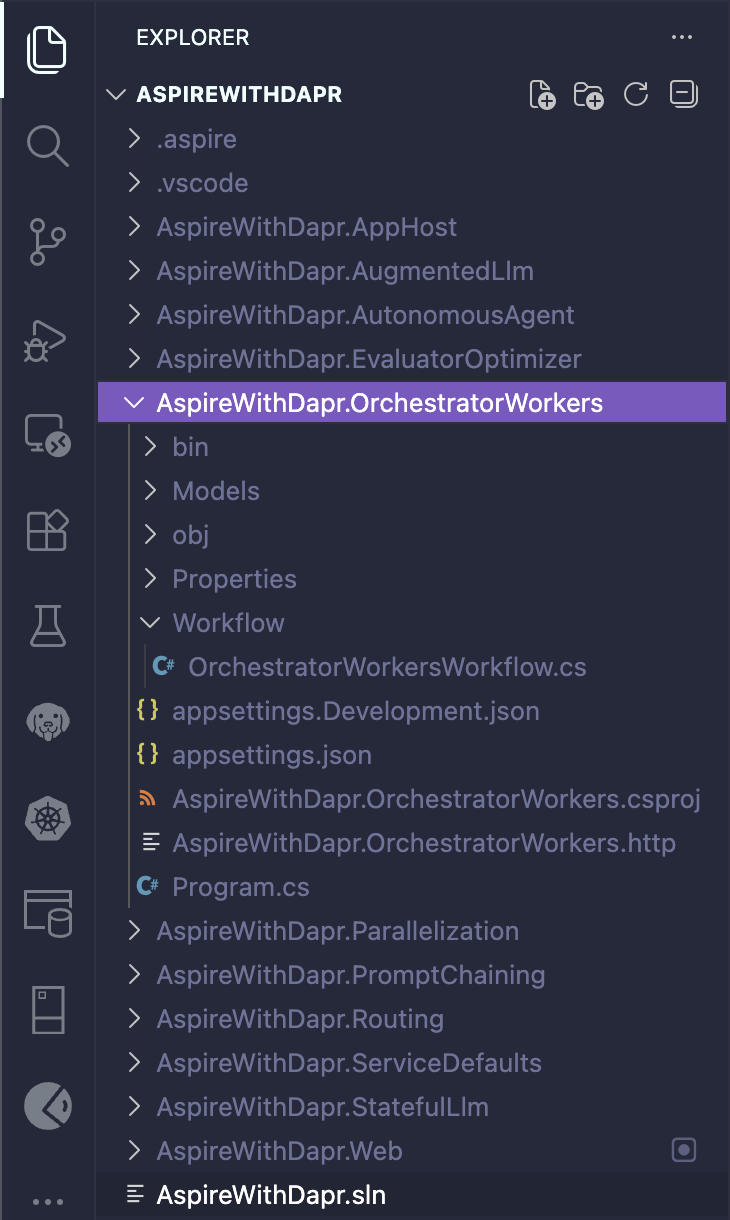

Changes in AppHost

The OrchestratorWorkers project (a new Minimal API) has been added, and the web frontend has been updated to reference it.

// AppHost.cs

using CommunityToolkit.Aspire.Hosting.Dapr;

var builder = DistributedApplication.CreateBuilder(args);

// Diagrid Dashboard for Dapr Workflow Visualization

var diagridDashboard = builder.AddContainer("diagrid-dashboard", "ghcr.io/diagridio/diagrid-dashboard", "latest")

.WithHttpEndpoint(8080, 8080, name: "dashboard");

// Add RedisInsight GUI for Redis data visualization

var redisInsight = builder.AddContainer("redisinsight", "redislabs/redisinsight", "latest")

.WithHttpEndpoint(8001, 8001)

.WithEnvironment("RI_APP_PORT", "8001")

.WithEnvironment("RI_HOST", "0.0.0.0")

.WithBindMount(Path.Combine(builder.AppHostDirectory, "redisinsight-data"), "/data");

// Pattern - 1. AugmentedLlm

var augmentedLlmService = builder.AddProject<Projects.AspireWithDapr_AugmentedLlm>("AugmentedLlm")

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml",

EnableApiLogging = false

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Pattern - 1.1 StatefulLlm

var statefulLlmService = builder.AddProject<Projects.AspireWithDapr_StatefulLlm>("StatefulLlm")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml",

EnableApiLogging = false

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Pattern - 2. PromptChaining

var promptChainingService = builder.AddProject<Projects.AspireWithDapr_PromptChaining>("PromptChaining")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml",

EnableApiLogging = false

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Pattern - 3. Routing

var routingService = builder.AddProject<Projects.AspireWithDapr_Routing>("Routing")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml"

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Pattern - 4. Parallelization

var parallelizationService = builder.AddProject<Projects.AspireWithDapr_Parallelization>("Parallelization")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml"

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Pattern - 5. OrchestratorWorkers

var orchestratorWorkersService = builder.AddProject<Projects.AspireWithDapr_OrchestratorWorkers>("OrchestratorWorkers")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml"

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

// Web Frontend

builder.AddProject<Projects.AspireWithDapr_Web>("webfrontend")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithReference(augmentedLlmService)

.WaitFor(augmentedLlmService)

.WithReference(statefulLlmService)

.WaitFor(statefulLlmService)

.WithReference(promptChainingService)

.WaitFor(promptChainingService)

.WithReference(routingService)

.WaitFor(routingService)

.WithReference(parallelizationService)

.WaitFor(parallelizationService)

.WithReference(orchestratorWorkersService)

.WaitFor(orchestratorWorkersService)

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml"

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")

.WithEnvironment("ZIPKIN_ENDPOINT", "http://localhost:9411/api/v2/spans");

builder.Build().Run();

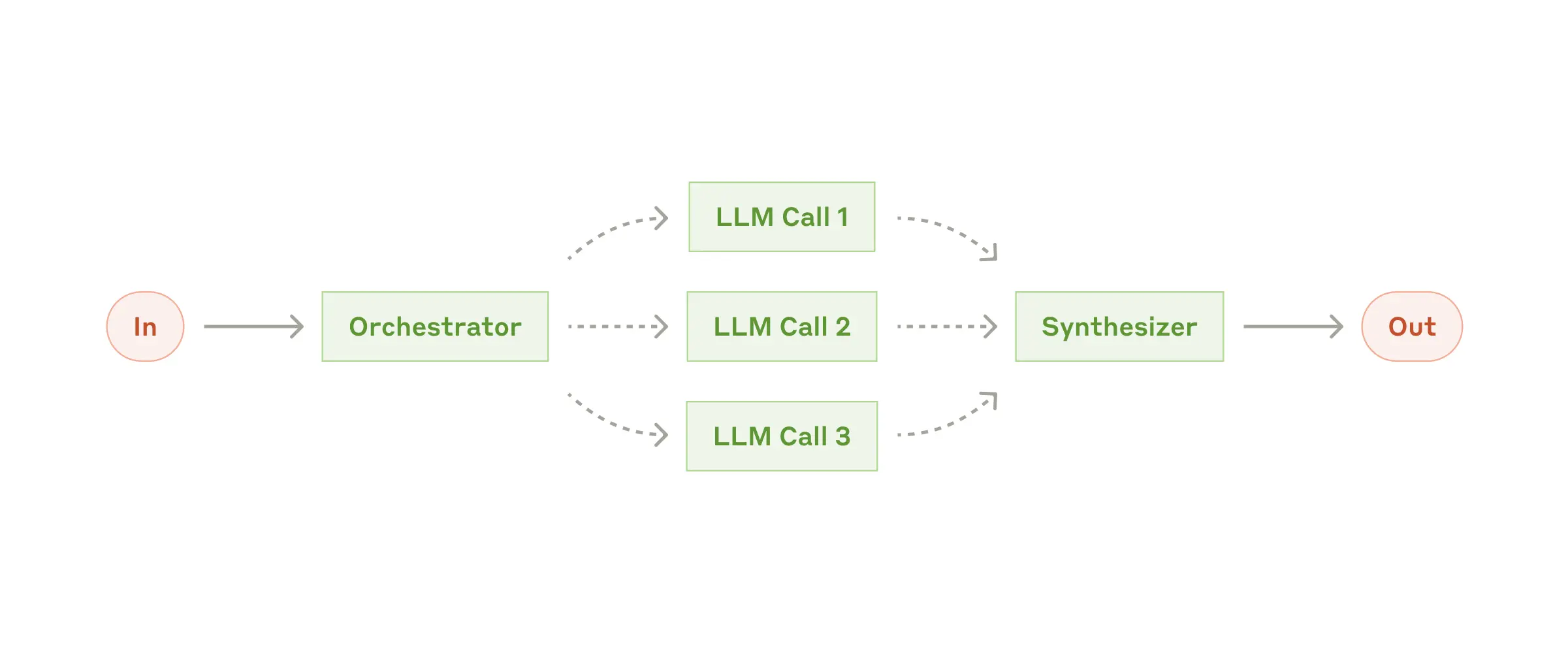

Orchestrator-Workers

The orchestrator–workers workflow centers on a primary LLM that dynamically decomposes tasks, delegates execution to worker LLMs, and synthesizes the outcomes.
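Before the Dapr-specific code, the three phases described above (decompose, delegate, synthesize) can be sketched without any Dapr dependency. This is a minimal illustration, not the project's implementation: `RunOrchestratorAsync` and `fakeLlm` are hypothetical names, and the `Func<string, Task<string>>` delegate is only a stand-in for a real model call such as the Dapr Conversation API.

```csharp
using System;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical stand-in for an LLM call: it simply echoes the first line of the prompt.
Func<string, Task<string>> fakeLlm = prompt =>
    Task.FromResult($"[model output for: {prompt.Split('\n')[0]}]");

async Task<string> RunOrchestratorAsync(string task, int workerCount, Func<string, Task<string>> llm)
{
    // 1. Orchestrator: ask the model to decompose the task, one subtask per line.
    var plan = await llm($"Break this task into {workerCount} subtasks, one per line: {task}");
    var subtasks = plan
        .Split('\n', StringSplitOptions.RemoveEmptyEntries | StringSplitOptions.TrimEntries)
        .Take(workerCount)
        .ToList();

    // 2. Workers: start every subtask concurrently (fan-out) and wait for all of them (fan-in).
    var outputs = await Task.WhenAll(subtasks.Select(s => llm($"Complete this subtask: {s}")));

    // 3. Synthesis: hand all worker outputs back to the model for one final answer.
    return await llm($"Combine these results for '{task}':\n{string.Join("\n", outputs)}");
}

Console.WriteLine(await RunOrchestratorAsync("Plan a product launch", 3, fakeLlm));
```

In the real service each of these phases becomes a Dapr Workflow activity, so the fan-out survives restarts and each step can be retried and observed on its own.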

dotnet add package Dapr.AI

dotnet add package Dapr.Workflow

// LlmModels.cs

using System.ComponentModel.DataAnnotations;

namespace AspireWithDapr.OrchestratorWorkers.Models;

public record LlmRequest(

[Required] [MinLength(1)] string Prompt,

Dictionary<string, object>? Context = null,

string? Model = null,

double? Temperature = null);

public record LlmResponse(

string Response,

Dictionary<string, object>? Metadata = null);

public record OrchestratorRequest(

[Required] [MinLength(1)] string Prompt,

int WorkerCount = 3,

Dictionary<string, object>? Context = null,

string? Model = null,

double? Temperature = null);

public record Subtask(

string Id,

string Description,

string Type);

public record WorkerTask(

Subtask Subtask,

string? Model = null,

double? Temperature = null);

public record WorkerResult(

string SubtaskId,

string Output,

double Confidence);

// Program.cs

#pragma warning disable DAPR_CONVERSATION

using System.ComponentModel.DataAnnotations;

using Dapr.AI.Conversation.Extensions;

using Dapr.Workflow;

using AspireWithDapr.OrchestratorWorkers.Models;

using AspireWithDapr.OrchestratorWorkers.Workflow;

var builder = WebApplication.CreateBuilder(args);

builder.AddServiceDefaults();

builder.Services.AddProblemDetails();

builder.Services.AddOpenApi();

builder.Services.AddDaprConversationClient();

builder.Services.AddDaprWorkflow(options =>

{

options.RegisterWorkflow<OrchestratorWorkersWorkflow>();

options.RegisterActivity<BreakdownActivity>();

options.RegisterActivity<WorkerActivity>();

options.RegisterActivity<AggregateActivity>();

options.RegisterActivity<LoggingActivity>();

});

var app = builder.Build();

// Initialize workflow logger with the app's logger factory

var loggerFactory = app.Services.GetRequiredService<ILoggerFactory>();

OrchestratorWorkersWorkflow.SetLogger(loggerFactory);

app.UseExceptionHandler();

if (app.Environment.IsDevelopment())

{

app.MapOpenApi();

}

app.MapGet("/", () => Results.Ok(new {

service = "Orchestrator-Workers LLM API",

version = "1.0.0",

endpoints = new[] { "/llm/query", "/llm/query/{instanceId}", "/llm/query/{instanceId}/status", "/health" },

description = "Orchestrator dynamically breaks down tasks, delegates to worker LLMs, and synthesizes results"

}))

.WithName("GetServiceInfo")

.WithTags("Info");

app.MapPost("/llm/query", async (

[Required] OrchestratorRequest request,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

if (string.IsNullOrWhiteSpace(request.Prompt))

{

return Results.BadRequest(new { error = "Prompt is required and cannot be empty." });

}

if (request.WorkerCount < 1 || request.WorkerCount > 10)

{

return Results.BadRequest(new { error = "WorkerCount must be between 1 and 10." });

}

try

{

logger.LogInformation("Starting Orchestrator-Workers workflow for prompt: {Prompt} with length {PromptLength} characters | Worker Count: {WorkerCount}",

request.Prompt, request.Prompt.Length, request.WorkerCount);

var instanceId = Guid.NewGuid().ToString();

await workflowClient.ScheduleNewWorkflowAsync(

nameof(OrchestratorWorkersWorkflow),

instanceId,

request);

logger.LogInformation("Workflow started with instance ID: {InstanceId}", instanceId);

return Results.Accepted($"/llm/query/{instanceId}", new {

instanceId,

status = "started"

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error starting workflow");

return Results.Problem(

detail: "An error occurred while starting the workflow.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("StartOrchestratorWorkersQuery")

.WithTags("LLM")

// The 202 body is { instanceId, status }, not an LlmResponse

.Produces(StatusCodes.Status202Accepted)

.Produces(StatusCodes.Status400BadRequest)

.Produces(StatusCodes.Status500InternalServerError);

app.MapGet("/llm/query/{instanceId}", async (

string instanceId,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

try

{

var workflowState = await workflowClient.GetWorkflowStateAsync(instanceId);

if (workflowState is null)

{

return Results.NotFound(new { error = $"Workflow instance {instanceId} not found." });

}

if (workflowState.RuntimeStatus != WorkflowRuntimeStatus.Completed)

{

return Results.Accepted($"/llm/query/{instanceId}", new

{

instanceId,

runtimeStatus = workflowState.RuntimeStatus.ToString()

});

}

var output = workflowState.ReadOutputAs<LlmResponse>();

return Results.Ok(new

{

instanceId,

runtimeStatus = workflowState.RuntimeStatus.ToString(),

result = output

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error retrieving workflow state for instance {InstanceId}", instanceId);

return Results.Problem(

detail: "An error occurred while retrieving the workflow state.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("GetOrchestratorWorkersQueryResult")

.WithTags("LLM")

.Produces<LlmResponse>()

.Produces(StatusCodes.Status404NotFound)

.Produces(StatusCodes.Status500InternalServerError);

app.MapGet("/llm/query/{instanceId}/status", async (

string instanceId,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

try

{

var workflowState = await workflowClient.GetWorkflowStateAsync(instanceId);

if (workflowState is null)

{

return Results.NotFound(new { error = $"Workflow instance {instanceId} not found." });

}

var status = workflowState.RuntimeStatus;

return Results.Ok(new

{

instanceId,

runtimeStatus = status.ToString(),

isCompleted = status == WorkflowRuntimeStatus.Completed,

isRunning = status == WorkflowRuntimeStatus.Running,

isFailed = status == WorkflowRuntimeStatus.Failed,

isTerminated = status == WorkflowRuntimeStatus.Terminated,

isPending = status == WorkflowRuntimeStatus.Pending

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error retrieving workflow status for instance {InstanceId}", instanceId);

return Results.Problem(

detail: "An error occurred while retrieving the workflow status.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("GetOrchestratorWorkersWorkflowStatus")

.WithTags("LLM")

.Produces(StatusCodes.Status200OK)

.Produces(StatusCodes.Status404NotFound)

.Produces(StatusCodes.Status500InternalServerError);

app.MapDefaultEndpoints();

app.Run();

// OrchestratorWorkersWorkflow

#pragma warning disable DAPR_CONVERSATION

using Dapr.Workflow;

using Dapr.AI.Conversation;

using Dapr.AI.Conversation.ConversationRoles;

using AspireWithDapr.OrchestratorWorkers.Models;

using System.Text.Json;

namespace AspireWithDapr.OrchestratorWorkers.Workflow;

public class OrchestratorWorkersWorkflow : Workflow<OrchestratorRequest, LlmResponse>

{

private static ILogger? _logger;

public static void SetLogger(ILoggerFactory loggerFactory)

{

_logger = loggerFactory.CreateLogger<OrchestratorWorkersWorkflow>();

}

public override async Task<LlmResponse> RunAsync(

WorkflowContext context,

OrchestratorRequest request)

{

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.Prompt);

// Create logger if not set, using a default factory as fallback

var logger = _logger ?? LoggerFactory.Create(builder => builder.AddConsole()).CreateLogger<OrchestratorWorkersWorkflow>();

var workflowInstanceId = context.InstanceId;

// Log workflow start using activity to ensure it's captured

var promptPreview = request.Prompt.Length > 100 ? request.Prompt[..100] + "..." : request.Prompt;

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Workflow Start] InstanceId: {workflowInstanceId} | Prompt: \"{promptPreview}\" | Prompt Length: {request.Prompt.Length} | Worker Count: {request.WorkerCount} | Model: {request.Model ?? "llama"} | Temperature: {request.Temperature ?? 0.7}"));

// Log full initial prompt for end-to-end tracking

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Initial Prompt] InstanceId: {workflowInstanceId}\n---\n{request.Prompt}\n---"));

context.SetCustomStatus(new { step = "breaking_down_task" });

// Step 1: Break down main task into subtasks

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 1: Breakdown] InstanceId: {workflowInstanceId} | Worker Count: {request.WorkerCount}"));

List<Subtask>? subtasks = null;

try

{

subtasks = await context.CallActivityAsync<List<Subtask>>(

nameof(BreakdownActivity),

new BreakdownRequest(request.Prompt, request.WorkerCount, request.Model, request.Temperature));

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 1: Breakdown Complete] InstanceId: {workflowInstanceId} | Subtasks Generated: {subtasks?.Count ?? 0}"));

}

catch (Exception ex)

{

logger.LogError(ex,

"[Step 1: Breakdown Failed] InstanceId: {InstanceId} | Error: {Error}",

workflowInstanceId,

ex.Message);

throw;

}

if (subtasks is null || subtasks.Count == 0)

{

logger.LogWarning(

"[Workflow Early Exit] InstanceId: {InstanceId} | Reason: No subtasks generated",

workflowInstanceId);

context.SetCustomStatus(new { step = "completed", warning = "no_subtasks_generated" });

return new LlmResponse(

"Unable to break down the task into subtasks.",

new Dictionary<string, object>

{

["workers_used"] = 0,

["workflow_type"] = "orchestrator_workers"

});

}

if (subtasks.Count > 0)

{

logger.LogDebug(

"[Step 1: Breakdown Output] InstanceId: {InstanceId} | Subtasks: {Subtasks}",

workflowInstanceId,

JsonSerializer.Serialize(subtasks.Select(s => new { s.Id, s.Type, DescriptionLength = s.Description.Length })));

}

context.SetCustomStatus(new { step = "distributing_to_workers", subtask_count = subtasks.Count });

// Step 2: Distribute subtasks to worker activities

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 2: DistributeToWorkers] InstanceId: {workflowInstanceId} | Subtask Count: {subtasks.Count}"));

List<WorkerResult>? workerResults = null;

try

{

var workerTasks = subtasks.Select(subtask =>

context.CallActivityAsync<WorkerResult>(

nameof(WorkerActivity),

new WorkerTask(subtask, request.Model, request.Temperature)));

var results = await Task.WhenAll(workerTasks);

workerResults = results.ToList();

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 2: DistributeToWorkers Complete] InstanceId: {workflowInstanceId} | Worker Results Count: {workerResults.Count}"));

}

catch (Exception ex)

{

logger.LogError(ex,

"[Step 2: DistributeToWorkers Failed] InstanceId: {InstanceId} | Error: {Error}",

workflowInstanceId,

ex.Message);

throw;

}

if (workerResults is null || workerResults.Count == 0)

{

logger.LogWarning(

"[Workflow Early Exit] InstanceId: {InstanceId} | Reason: No worker results",

workflowInstanceId);

context.SetCustomStatus(new { step = "completed", warning = "no_worker_results" });

return new LlmResponse(

"Worker execution completed but no results were generated.",

new Dictionary<string, object>

{

["workers_used"] = subtasks.Count,

["results_count"] = 0,

["workflow_type"] = "orchestrator_workers"

});

}

if (workerResults.Count > 0)

{

logger.LogDebug(

"[Step 2: DistributeToWorkers Output] InstanceId: {InstanceId} | Worker Results: {Results}",

workflowInstanceId,

JsonSerializer.Serialize(workerResults.Select(r => new

{

r.SubtaskId,

OutputLength = r.Output?.Length ?? 0,

r.Confidence

})));

}

context.SetCustomStatus(new { step = "aggregating_results" });

// Step 3: Aggregate worker results

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 3: Aggregate] InstanceId: {workflowInstanceId} | Worker Results Count: {workerResults.Count} | Original Prompt: \"{promptPreview}\""));

LlmResponse? aggregated = null;

try

{

aggregated = await context.CallActivityAsync<LlmResponse>(

nameof(AggregateActivity),

new AggregateRequest(

request.Prompt,

workerResults,

subtasks,

request.Model,

request.Temperature));

var aggregatedPreview = aggregated?.Response?.Length > 150 ? aggregated.Response[..150] + "..." : aggregated?.Response;

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Step 3: Aggregate Complete] InstanceId: {workflowInstanceId} | Aggregated: \"{aggregatedPreview}\" | Aggregated Length: {aggregated?.Response?.Length ?? 0}"));

// Log full aggregated response after completion

if (aggregated?.Response != null)

{

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Aggregated Response After Step 3] InstanceId: {workflowInstanceId}\n---\n{aggregated.Response}\n---"));

}

}

catch (Exception ex)

{

logger.LogError(ex,

"[Step 3: Aggregate Failed] InstanceId: {InstanceId} | Error: {Error}",

workflowInstanceId,

ex.Message);

throw;

}

logger.LogDebug(

"[Step 3: Aggregate Output] InstanceId: {InstanceId} | Aggregated Preview: {Preview}",

workflowInstanceId,

aggregated?.Response?.Length > 200 ? aggregated.Response[..200] + "..." : aggregated?.Response);

context.SetCustomStatus(new { step = "completed" });

// Enhance metadata with orchestrator-workers information

var baseMetadata = aggregated?.Metadata ?? new Dictionary<string, object>();

var enhancedMetadata = new Dictionary<string, object>(baseMetadata)

{

["workers_used"] = subtasks.Count,

["subtasks"] = subtasks.Select(s => s.Type).ToList(),

["workflow_type"] = "orchestrator_workers"

};

// Guard before the `with` expression: `aggregated!` only silences the compiler,

// so a null result would otherwise surface as a NullReferenceException

if (aggregated is null)

{

logger.LogError(

"[Workflow Error] InstanceId: {InstanceId} | Final response is null",

workflowInstanceId);

throw new InvalidOperationException("Workflow completed but final response is null.");

}

var finalResponse = aggregated with { Metadata = enhancedMetadata };

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Workflow Complete] InstanceId: {workflowInstanceId} | Total Steps: 3 | Workers: {subtasks.Count} | Final Response Length: {finalResponse.Response?.Length ?? 0}"));

if (finalResponse.Metadata != null)

{

logger.LogDebug(

"[Workflow Final Metadata] InstanceId: {InstanceId} | Metadata: {Metadata}",

workflowInstanceId,

JsonSerializer.Serialize(finalResponse.Metadata));

}

return finalResponse;

}

}

// Logging activity to ensure workflow logs are captured

public class LoggingActivity(ILogger<LoggingActivity> logger) : WorkflowActivity<LogMessage, bool>

{

public override Task<bool> RunAsync(WorkflowActivityContext context, LogMessage message)

{

try

{

// Invariant casing keeps the level match independent of the host culture

switch (message.Level.ToLowerInvariant())

{

case "error":

logger.LogError("[Workflow] {Message}", message.Message);

break;

case "warning":

logger.LogWarning("[Workflow] {Message}", message.Message);

break;

case "debug":

logger.LogDebug("[Workflow] {Message}", message.Message);

break;

default:

logger.LogInformation("[Workflow] {Message}", message.Message);

break;

}

return Task.FromResult(true);

}

catch (Exception ex)

{

logger.LogError(ex, "[LoggingActivity] Error logging message: {Message}", message.Message);

return Task.FromResult(false);

}

}

}

public record LogMessage(string Message, string Level = "Information");

// Activity to break down main task into subtasks

public class BreakdownActivity(

DaprConversationClient conversationClient,

ILogger<BreakdownActivity> logger) : WorkflowActivity<BreakdownRequest, List<Subtask>>

{

public override async Task<List<Subtask>> RunAsync(

WorkflowActivityContext context,

BreakdownRequest request)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.Prompt);

var activityName = nameof(BreakdownActivity);

var startTime = DateTime.UtcNow;

var componentName = request.Model ?? "llama";

var promptPreview = request.Prompt.Length > 100 ? request.Prompt[..100] + "..." : request.Prompt;

logger.LogInformation(

"[{Activity} Start] Prompt: \"{Prompt}\" | Prompt Length: {PromptLength} | Worker Count: {WorkerCount} | Model: {Model} | Temperature: {Temperature}",

activityName,

promptPreview,

request.Prompt.Length,

request.WorkerCount,

componentName,

request.Temperature ?? 0.5);

logger.LogDebug(

"[{Activity} Input] Full Request: {Request}",

activityName,

JsonSerializer.Serialize(new

{

PromptLength = request.Prompt.Length,

PromptPreview = request.Prompt.Length > 200 ? request.Prompt[..200] + "..." : request.Prompt,

request.WorkerCount,

request.Model,

request.Temperature

}));

var breakdownPrompt = $"""

Break down this task into {request.WorkerCount} specialized subtasks:

"{request.Prompt}"

Return each subtask on a new line in the format: "ID|Description|Type"

Types can be: research, analysis, synthesis, validation, creative

Example format:

task1|Research the topic thoroughly|research

task2|Analyze the findings|analysis

""";

var conversationInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(breakdownPrompt)]

}

]);

var conversationOptions = new ConversationOptions(componentName)

{

Temperature = request.Temperature ?? 0.5

};

logger.LogDebug(

"[{Activity}] Calling LLM conversation API with component: {Component}",

activityName,

componentName);

var response = await conversationClient.ConverseAsync(

[conversationInput],

conversationOptions,

CancellationToken.None);

if (response?.Outputs is null || response.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs. Response: {Response}",

activityName,

response != null ? JsonSerializer.Serialize(response) : "null");

throw new InvalidOperationException("LLM returned no outputs for task breakdown.");

}

logger.LogDebug(

"[{Activity}] Received {OutputCount} output(s) from LLM",

activityName,

response.Outputs.Count);

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in response.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

response.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty response for task breakdown.");

}

// Parse subtasks from response

var subtasks = new List<Subtask>();

var lines = responseText.Split('\n', StringSplitOptions.RemoveEmptyEntries);

foreach (var line in lines)

{

var trimmedLine = line.Trim();

if (string.IsNullOrWhiteSpace(trimmedLine)) continue;

var parts = trimmedLine.Split('|', 3);

if (parts.Length >= 3)

{

var id = parts[0].Trim();

var description = parts[1].Trim();

var type = parts[2].Trim();

if (!string.IsNullOrWhiteSpace(id) && !string.IsNullOrWhiteSpace(description))

{

subtasks.Add(new Subtask(id, description, type));

}

}

}

// Ensure we have at least the requested number of subtasks

while (subtasks.Count < request.WorkerCount && subtasks.Count < 10) // Cap at 10 to avoid infinite loop

{

var index = subtasks.Count + 1;

subtasks.Add(new Subtask(

$"task{index}",

$"{request.Prompt} (Subtask {index})",

"general"));

}

// Limit to requested worker count

subtasks = subtasks.Take(request.WorkerCount).ToList();

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Subtasks Generated: {Count} | Choices: {ChoiceCount} | ConversationId: {ConversationId}",

activityName,

duration,

subtasks.Count,

choiceCount,

response.ConversationId ?? "none");

logger.LogDebug(

"[{Activity} Output] Subtasks: {Subtasks}",

activityName,

JsonSerializer.Serialize(subtasks.Select(s => new { s.Id, s.Type, DescriptionLength = s.Description.Length })));

return subtasks;

}

}
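The parsing step inside BreakdownActivity above is easy to get wrong, because models often wrap the requested `ID|Description|Type` lines in extra commentary. The sketch below isolates that logic so it can be tried on its own. It is an illustration under assumptions, not the project's code: `ParseSubtasks` is a hypothetical helper, it uses tuples instead of the `Subtask` record, and the fallback description text is made up for the example.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Parses "ID|Description|Type" lines; lines that do not match the format are skipped.
List<(string Id, string Description, string Type)> ParseSubtasks(string responseText, int workerCount)
{
    var subtasks = new List<(string Id, string Description, string Type)>();

    foreach (var line in responseText.Split('\n', StringSplitOptions.RemoveEmptyEntries))
    {
        // Limit the split to 3 parts so a '|' inside the type field does not create extra columns.
        var parts = line.Trim().Split('|', 3);
        if (parts.Length < 3) continue;

        var (id, description, type) = (parts[0].Trim(), parts[1].Trim(), parts[2].Trim());
        if (id.Length > 0 && description.Length > 0)
            subtasks.Add((id, description, type));
    }

    // Pad with generic subtasks when the model returned fewer usable lines than requested.
    while (subtasks.Count < workerCount)
        subtasks.Add(($"task{subtasks.Count + 1}", $"Fallback subtask {subtasks.Count + 1}", "general"));

    // Trim when the model returned more subtasks than requested.
    return subtasks.Take(workerCount).ToList();
}

var sample = "Here is the plan:\ntask1|Research the topic|research\nnot a subtask\ntask2|Analyze the findings|analysis";
foreach (var s in ParseSubtasks(sample, 3))
    Console.WriteLine($"{s.Id} [{s.Type}]: {s.Description}");
```

With the sample input, the two well-formed lines are kept, the commentary lines are dropped, and a third generic subtask is added so that three workers still have something to run.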

// Activity to execute worker task (combines research and analysis)

public class WorkerActivity(

DaprConversationClient conversationClient,

ILogger<WorkerActivity> logger) : WorkflowActivity<WorkerTask, WorkerResult>

{

public override async Task<WorkerResult> RunAsync(

WorkflowActivityContext context,

WorkerTask input)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(input);

ArgumentNullException.ThrowIfNull(input.Subtask);

var activityName = nameof(WorkerActivity);

var startTime = DateTime.UtcNow;

var componentName = input.Model ?? "llama";

logger.LogInformation(

"[{Activity} Start] Subtask ID: {SubtaskId} | Type: {Type} | Description: \"{Description}\" | Model: {Model}",

activityName,

input.Subtask.Id,

input.Subtask.Type,

input.Subtask.Description.Length > 100 ? input.Subtask.Description[..100] + "..." : input.Subtask.Description,

componentName);

// Step 1: Worker-specific research

string? research = null;

try

{

var researchPrompt = $"""

As a {input.Subtask.Type} specialist, research this subtask:

"{input.Subtask.Description}"

Provide comprehensive research notes.

""";

var researchInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(researchPrompt)]

}

]);

var researchOptions = new ConversationOptions(componentName)

{

Temperature = input.Temperature ?? 0.6

};

logger.LogDebug(

"[{Activity}] Calling LLM for research with component: {Component}",

activityName,

componentName);

var researchResponse = await conversationClient.ConverseAsync(

[researchInput],

researchOptions,

CancellationToken.None);

if (researchResponse?.Outputs != null && researchResponse.Outputs.Count > 0)

{

foreach (var output in researchResponse.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

research += choice.Message?.Content ?? string.Empty;

}

}

}

if (string.IsNullOrWhiteSpace(research))

{

research = "No research data available.";

}

logger.LogDebug(

"[{Activity}] Research Complete | Research Length: {Length}",

activityName,

research?.Length ?? 0);

}

catch (Exception ex)

{

logger.LogWarning(ex,

"[{Activity}] Research failed, continuing without research | Subtask ID: {SubtaskId}",

activityName,

input.Subtask.Id);

research = "No research data available.";

}

// Step 2: Worker-specific analysis

LlmResponse? analysis = null;

try

{

var analysisPrompt = $"Analyze: {input.Subtask.Description}\n\nResearch: {research}";

var analysisInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(analysisPrompt)]

}

]);

var analysisOptions = new ConversationOptions(componentName)

{

Temperature = input.Temperature ?? 0.7

};

logger.LogDebug(

"[{Activity}] Calling LLM for analysis with component: {Component}",

activityName,

componentName);

var analysisResponse = await conversationClient.ConverseAsync(

[analysisInput],

analysisOptions,

CancellationToken.None);

if (analysisResponse?.Outputs is null || analysisResponse.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs for analysis. Response: {Response}",

activityName,

analysisResponse != null ? JsonSerializer.Serialize(analysisResponse) : "null");

throw new InvalidOperationException("LLM returned no outputs for specialized analysis.");

}

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in analysisResponse.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response for analysis. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

analysisResponse.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty response for specialized analysis.");

}

analysis = new LlmResponse(

responseText,

new Dictionary<string, object>

{

["model"] = componentName,

["temperature"] = input.Temperature ?? 0.7,

["timestamp"] = DateTime.UtcNow,

["conversationId"] = analysisResponse.ConversationId ?? string.Empty,

["type"] = input.Subtask.Type,

["confidence"] = 0.85

});

logger.LogDebug(

"[{Activity}] Analysis Complete | Analysis Length: {Length}",

activityName,

analysis?.Response?.Length ?? 0);

}

catch (Exception ex)

{

logger.LogError(ex,

"[{Activity}] Analysis failed | Subtask ID: {SubtaskId}",

activityName,

input.Subtask.Id);

throw;

}

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

var confidence = analysis?.Metadata?.TryGetValue("confidence", out var confObj) == true && confObj is double conf

? conf

: 0.85; // Default confidence

var result = new WorkerResult(

input.Subtask.Id,

analysis?.Response ?? "No output generated",

confidence);

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Subtask ID: {SubtaskId} | Output Length: {OutputLength} | Confidence: {Confidence}",

activityName,

duration,

input.Subtask.Id,

result.Output?.Length ?? 0,

result.Confidence);

return result;

}

}

// Activity to aggregate worker results

public class AggregateActivity(

DaprConversationClient conversationClient,

ILogger<AggregateActivity> logger) : WorkflowActivity<AggregateRequest, LlmResponse>

{

public override async Task<LlmResponse> RunAsync(

WorkflowActivityContext context,

AggregateRequest request)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(request);

ArgumentNullException.ThrowIfNull(request.WorkerResults);

ArgumentNullException.ThrowIfNull(request.Subtasks);

var activityName = nameof(AggregateActivity);

var startTime = DateTime.UtcNow;

var componentName = request.Model ?? "llama";

var promptPreview = request.OriginalPrompt.Length > 100 ? request.OriginalPrompt[..100] + "..." : request.OriginalPrompt;

logger.LogInformation(

"[{Activity} Start] Original Prompt: \"{Prompt}\" | Original Prompt Length: {PromptLength} | Worker Results Count: {ResultsCount} | Subtasks Count: {SubtasksCount} | Model: {Model} | Temperature: {Temperature}",

activityName,

promptPreview,

request.OriginalPrompt.Length,

request.WorkerResults.Count,

request.Subtasks.Count,

componentName,

request.Temperature ?? 0.6);

logger.LogDebug(

"[{Activity} Input] Original Prompt: {Prompt} | Worker Results: {Results} | Subtasks: {Subtasks}",

activityName,

request.OriginalPrompt,

JsonSerializer.Serialize(request.WorkerResults.Select(r => new { r.SubtaskId, OutputLength = r.Output?.Length ?? 0, r.Confidence })),

JsonSerializer.Serialize(request.Subtasks.Select(s => new { s.Id, s.Type })));

var resultsText = string.Join("\n\n",

request.WorkerResults.Select((r, i) =>

{

var subtask = request.Subtasks.FirstOrDefault(s => s.Id == r.SubtaskId) ?? request.Subtasks[i];

return $"[Worker {i + 1} - {subtask.Type}]\nSubtask: {subtask.Description}\n\nOutput:\n{r.Output}";

}));

var aggregatePrompt = $"""

Original task: {request.OriginalPrompt}

Worker results from specialized subtasks:

{resultsText}

Synthesize all worker contributions into a comprehensive final answer.

Requirements:

1. Acknowledge each worker's contribution

2. Integrate insights from all subtasks

3. Create a unified, well-structured response

4. Maintain coherence and flow

5. Highlight how different perspectives contribute to the final answer

Synthesized Answer:

""";

var conversationInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(aggregatePrompt)]

}

]);

var conversationOptions = new ConversationOptions(componentName)

{

Temperature = request.Temperature ?? 0.6

};

logger.LogDebug(

"[{Activity}] Calling LLM conversation API with component: {Component}",

activityName,

componentName);

var response = await conversationClient.ConverseAsync(

[conversationInput],

conversationOptions,

CancellationToken.None);

if (response?.Outputs is null || response.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs. Response: {Response}",

activityName,

response != null ? JsonSerializer.Serialize(response) : "null");

throw new InvalidOperationException("LLM returned no outputs for aggregation.");

}

logger.LogDebug(

"[{Activity}] Received {OutputCount} output(s) from LLM",

activityName,

response.Outputs.Count);

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in response.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

response.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty aggregation.");

}

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

var metadata = new Dictionary<string, object>

{

["model"] = componentName,

["temperature"] = request.Temperature ?? 0.6,

["timestamp"] = DateTime.UtcNow,

["conversationId"] = response.ConversationId ?? string.Empty,

["workers_aggregated"] = request.WorkerResults.Count,

["aggregation_method"] = "orchestrator_synthesis"

};

var result = new LlmResponse(responseText, metadata);

var aggregatedPreview = responseText.Length > 150 ? responseText[..150] + "..." : responseText;

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Aggregated: \"{Aggregated}\" | Aggregated Length: {AggregatedLength} | Choices: {ChoiceCount} | ConversationId: {ConversationId} | Workers: {WorkersCount}",

activityName,

duration,

aggregatedPreview,

responseText.Length,

choiceCount,

response.ConversationId ?? "none",

request.WorkerResults.Count);

logger.LogDebug(

"[{Activity} Output] Aggregated Preview: {Preview} | Metadata: {Metadata}",

activityName,

responseText.Length > 300 ? responseText[..300] + "..." : responseText,

JsonSerializer.Serialize(metadata));

return result;

}

}

// Request/Response models for activities

public record BreakdownRequest(

string Prompt,

int WorkerCount,

string? Model = null,

double? Temperature = null);

public record AggregateRequest(

string OriginalPrompt,

List<WorkerResult> WorkerResults,

List<Subtask> Subtasks,

string? Model = null,

double? Temperature = null);

If you walk through the code, you will see that it implements a Dapr Workflow based on the Orchestrator-Workers pattern. This pattern breaks a complex task down into subtasks, distributes them to parallel workers, and aggregates the results into a unified response.

The workflow consists of three main steps (activities):

- Breakdown – Calls the LLM to decompose the user prompt into WorkerCount specialized subtasks. Each subtask has an ID, description, and type (research/analysis/synthesis/validation/creative).

- Worker – Executes a subtask in two phases: (1) Research, which gathers information for the subtask, and (2) Analysis, which analyzes the findings and produces the output. Each worker returns a WorkerResult with a confidence score. The workers run in parallel, and the WorkerCount value supplied by the user determines how many are started.

- Aggregate – Synthesizes all worker outputs into a unified final response.
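The three steps above follow a fan-out/fan-in shape. The following is a minimal, Dapr-free sketch of that shape, not the workflow from this post: `SketchSubtask`, `SketchWorkerResult`, and `OrchestratorWorkersSketch` are hypothetical names introduced only for illustration, and the worker is a stub that stands in for the research and analysis LLM calls.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Threading.Tasks;

// Hypothetical, simplified stand-ins for the Subtask and WorkerResult records.
public record SketchSubtask(string Id, string Description);
public record SketchWorkerResult(string SubtaskId, string Output);

public static class OrchestratorWorkersSketch
{
    // Step 1 (Breakdown): split the prompt into workerCount subtasks.
    public static List<SketchSubtask> Breakdown(string prompt, int workerCount) =>
        Enumerable.Range(1, workerCount)
            .Select(i => new SketchSubtask($"subtask-{i}", $"Part {i} of: {prompt}"))
            .ToList();

    // Step 2 (Worker): stub in place of the research + analysis LLM calls.
    public static async Task<SketchWorkerResult> RunWorkerAsync(SketchSubtask subtask)
    {
        await Task.Yield();
        return new SketchWorkerResult(subtask.Id, $"done({subtask.Description})");
    }

    // Step 3 (Aggregate): merge the worker outputs, keeping subtask order.
    public static string Aggregate(IEnumerable<SketchWorkerResult> results) =>
        string.Join(" | ", results.Select(r => $"{r.SubtaskId}: {r.Output}"));

    public static async Task<string> RunAsync(string prompt, int workerCount)
    {
        var subtasks = Breakdown(prompt, workerCount);

        // Fan out: start every worker before awaiting any of them.
        var workerTasks = subtasks.Select(RunWorkerAsync).ToList();

        // Fan in: Task.WhenAll returns results in the same order as the tasks.
        var results = await Task.WhenAll(workerTasks);

        return Aggregate(results);
    }
}
```

In a Dapr workflow the same shape is usually written by collecting the tasks returned by `context.CallActivityAsync` and awaiting them together with `Task.WhenAll`, so each worker call is checkpointed as its own activity.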

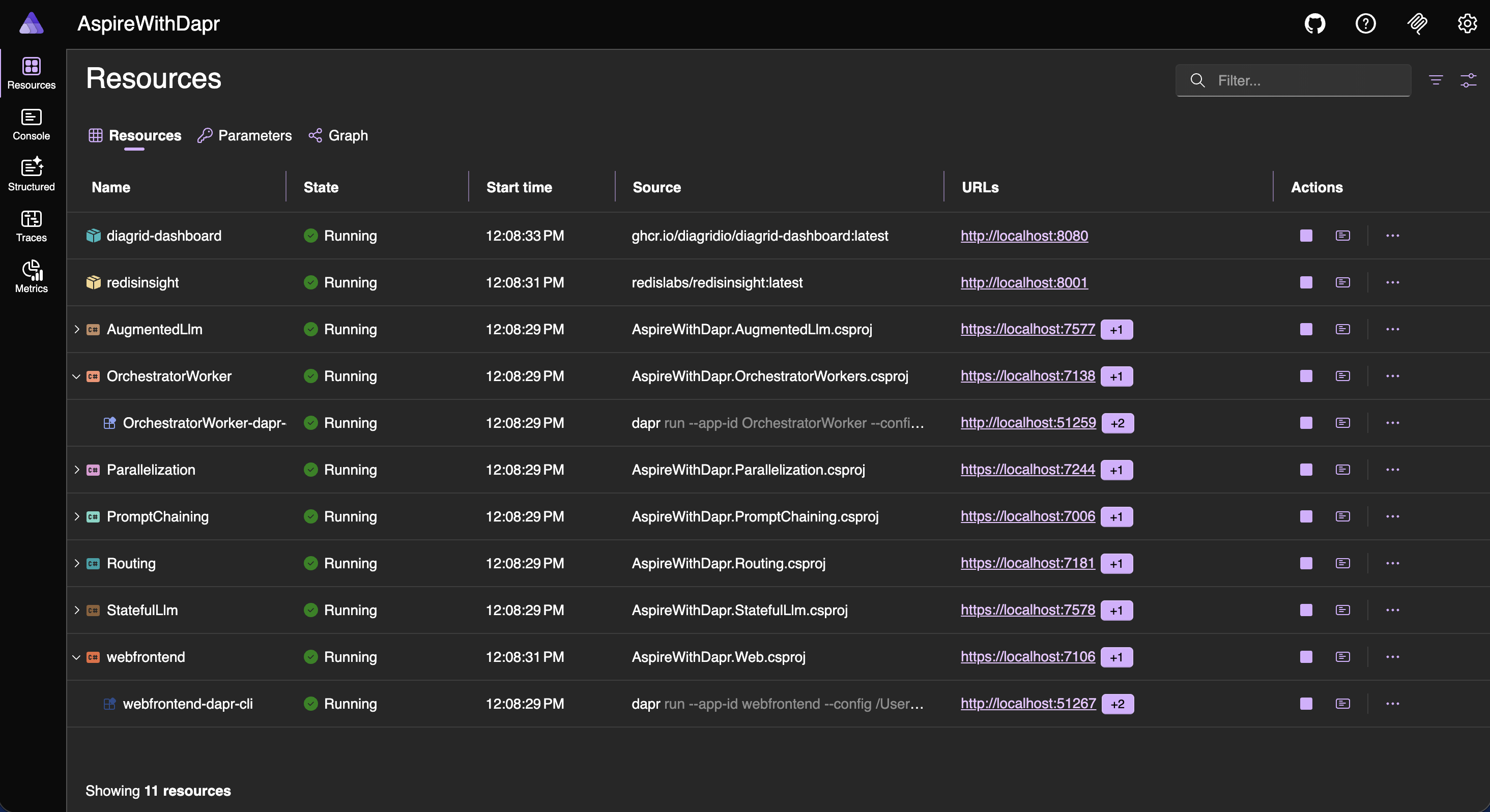

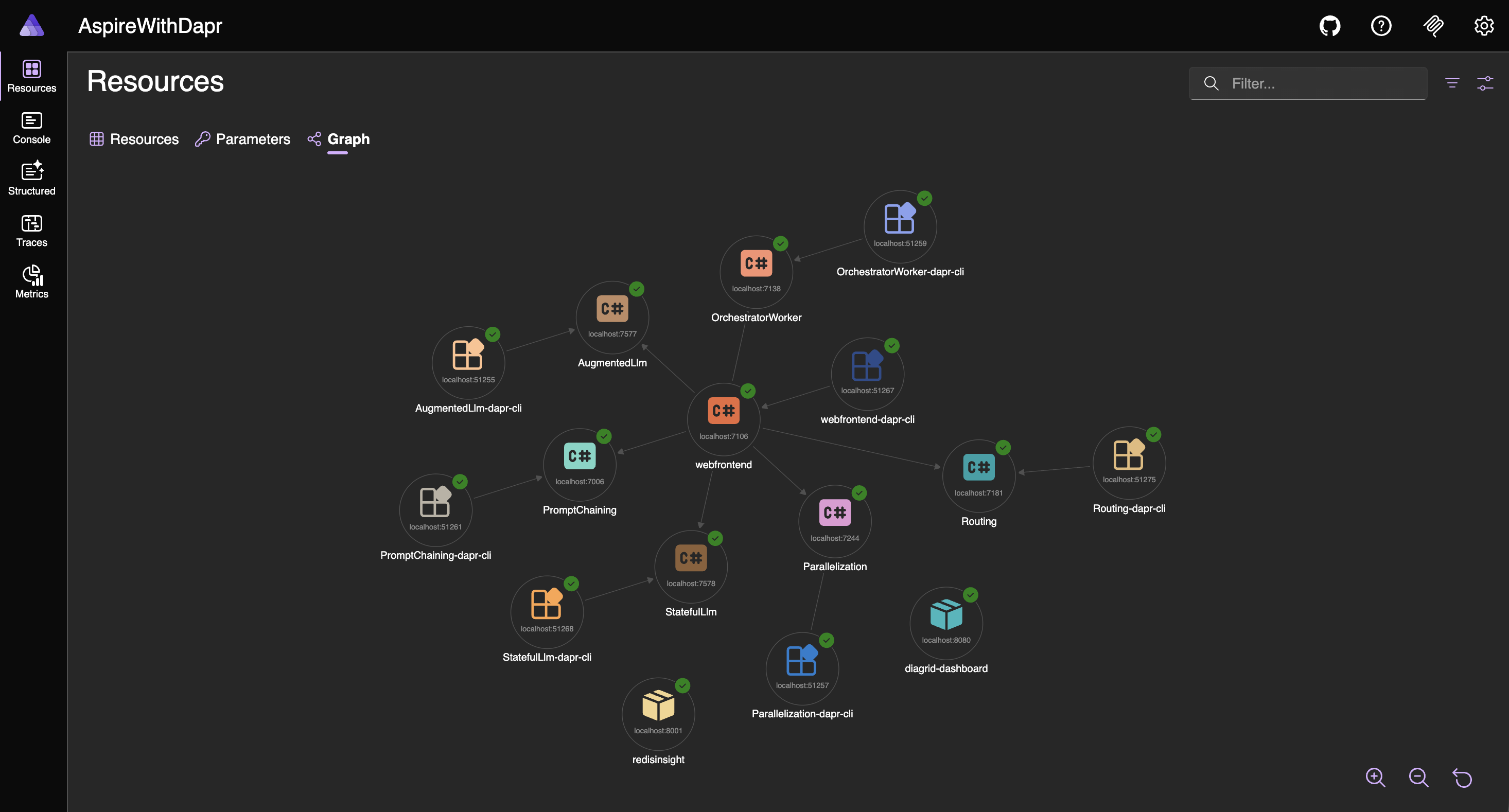

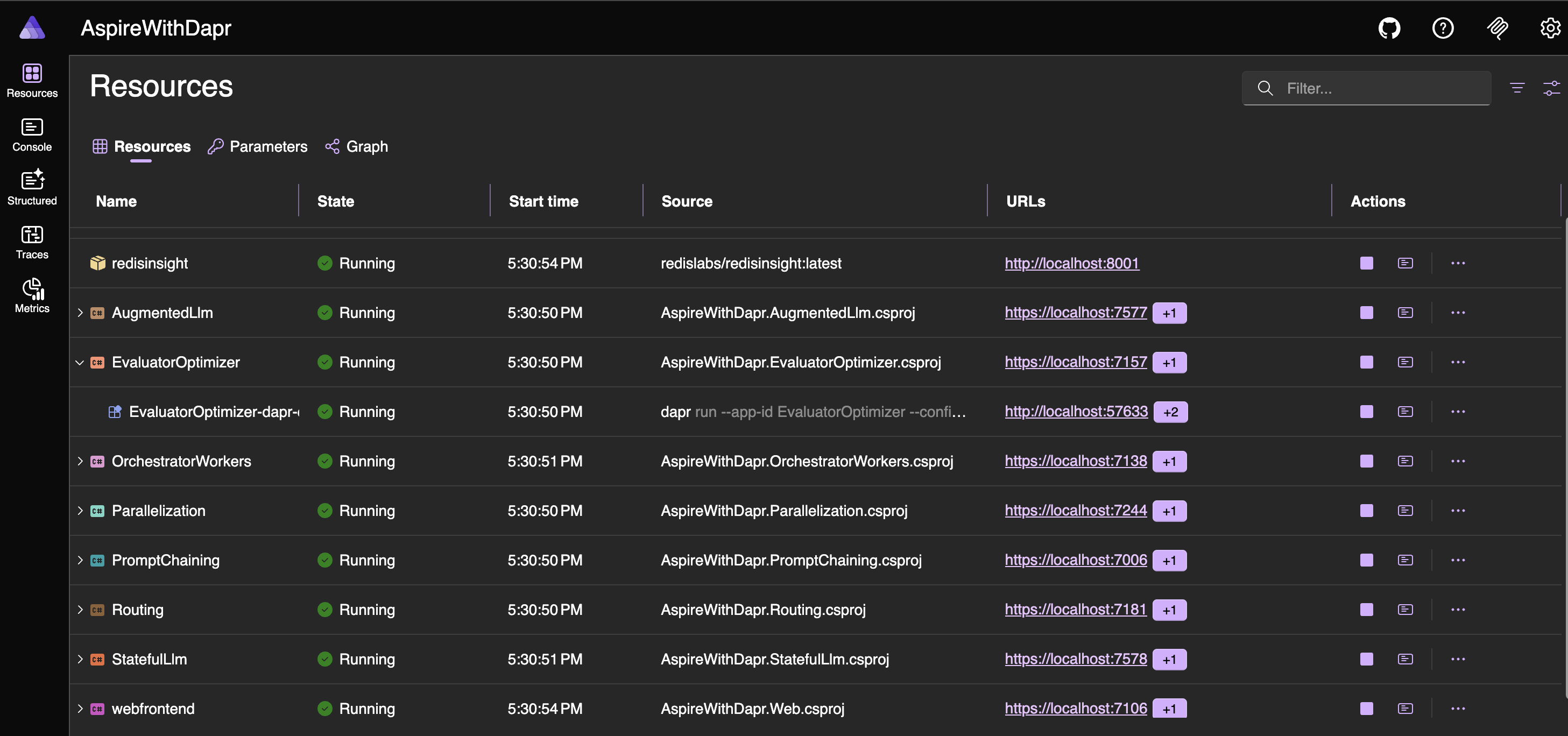

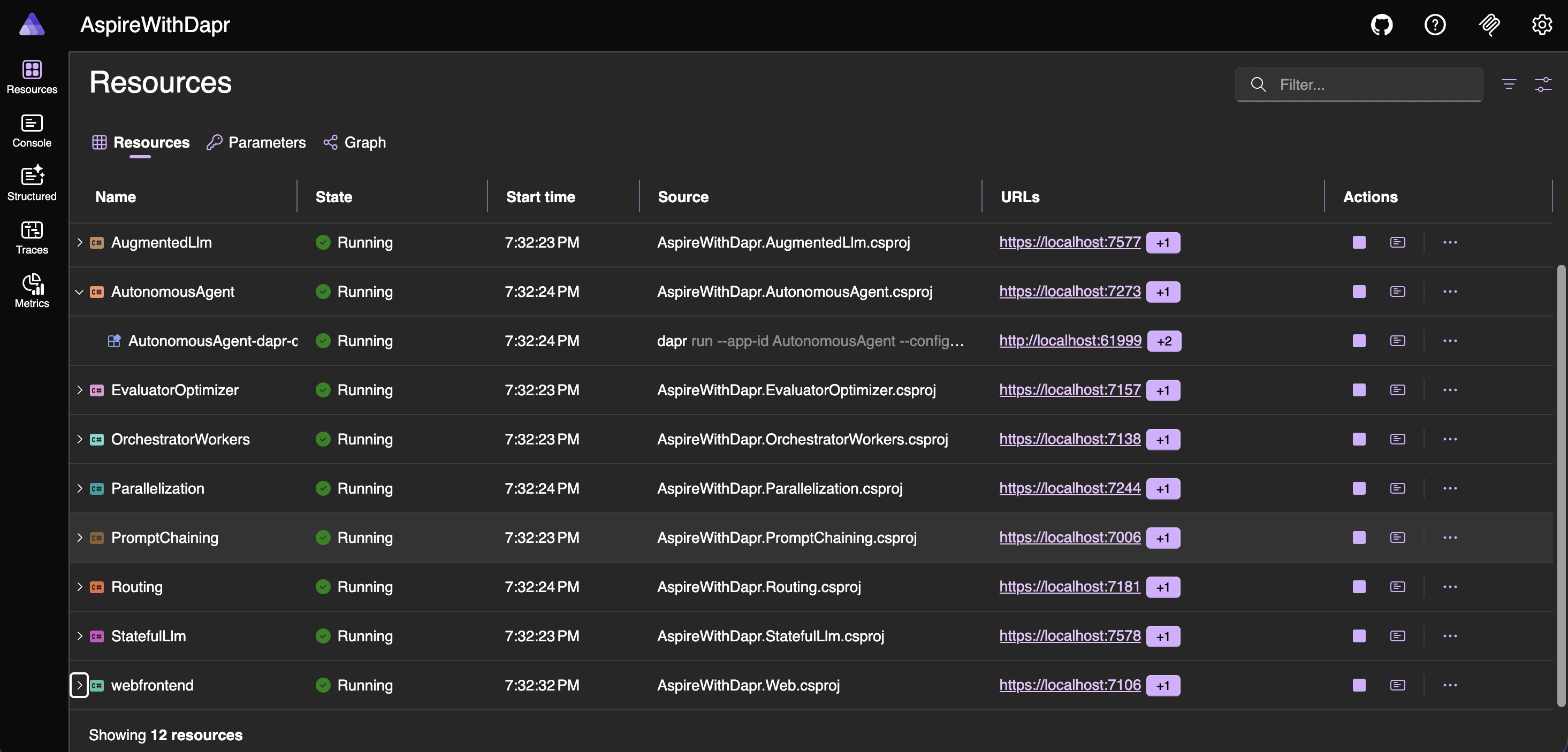

Aspire, Diagrid, and RedisInsight UIs (dashboards)

To run the application, execute the following command.

aspire run

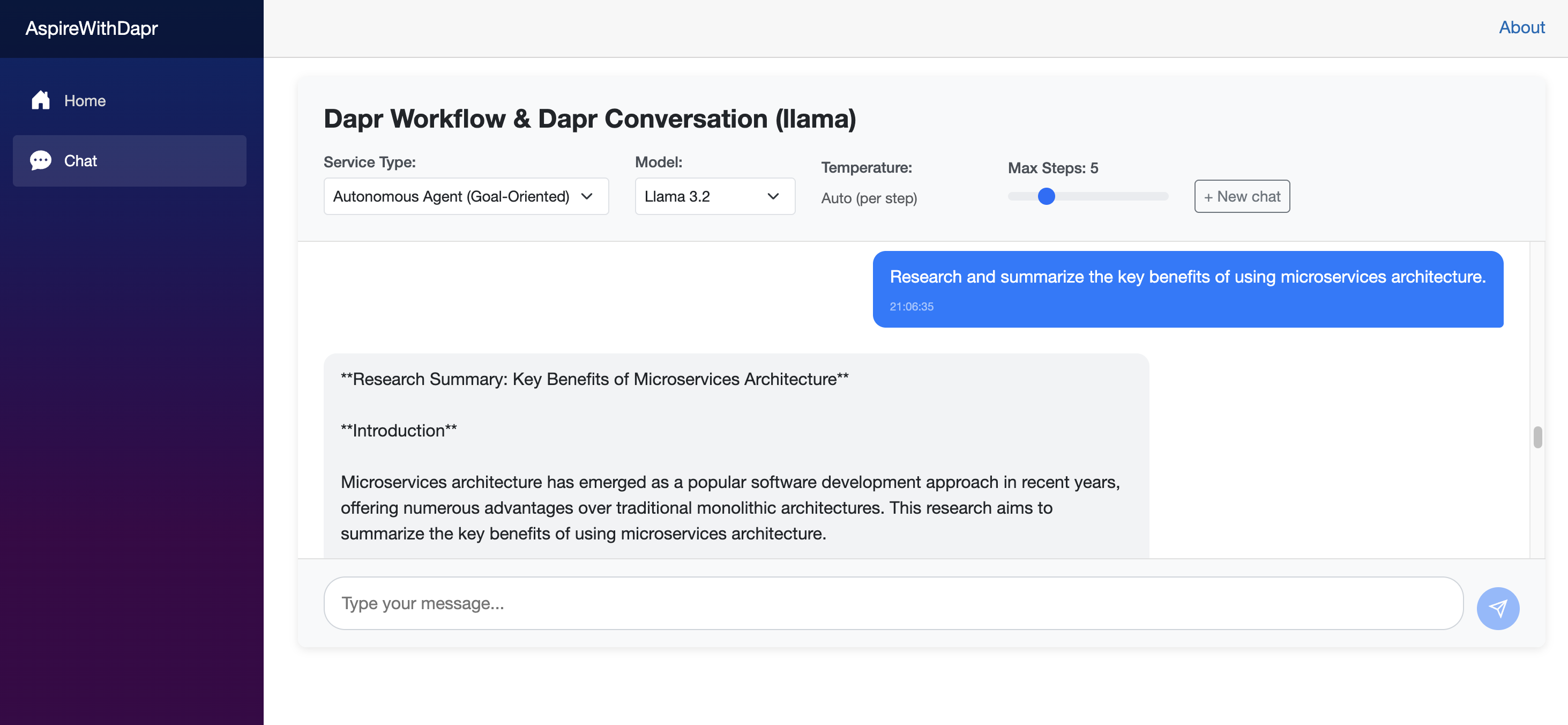

Let’s run the web application

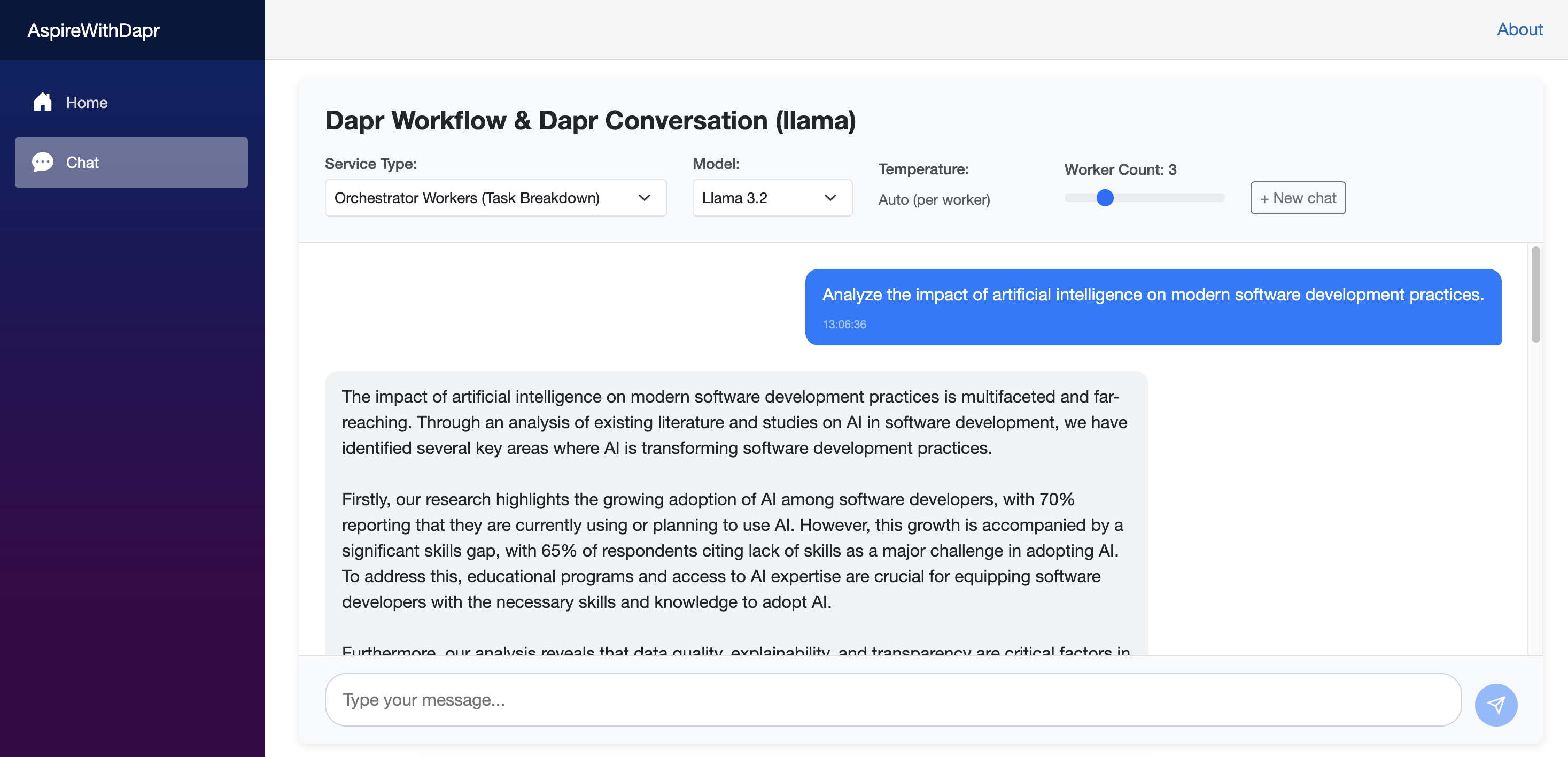

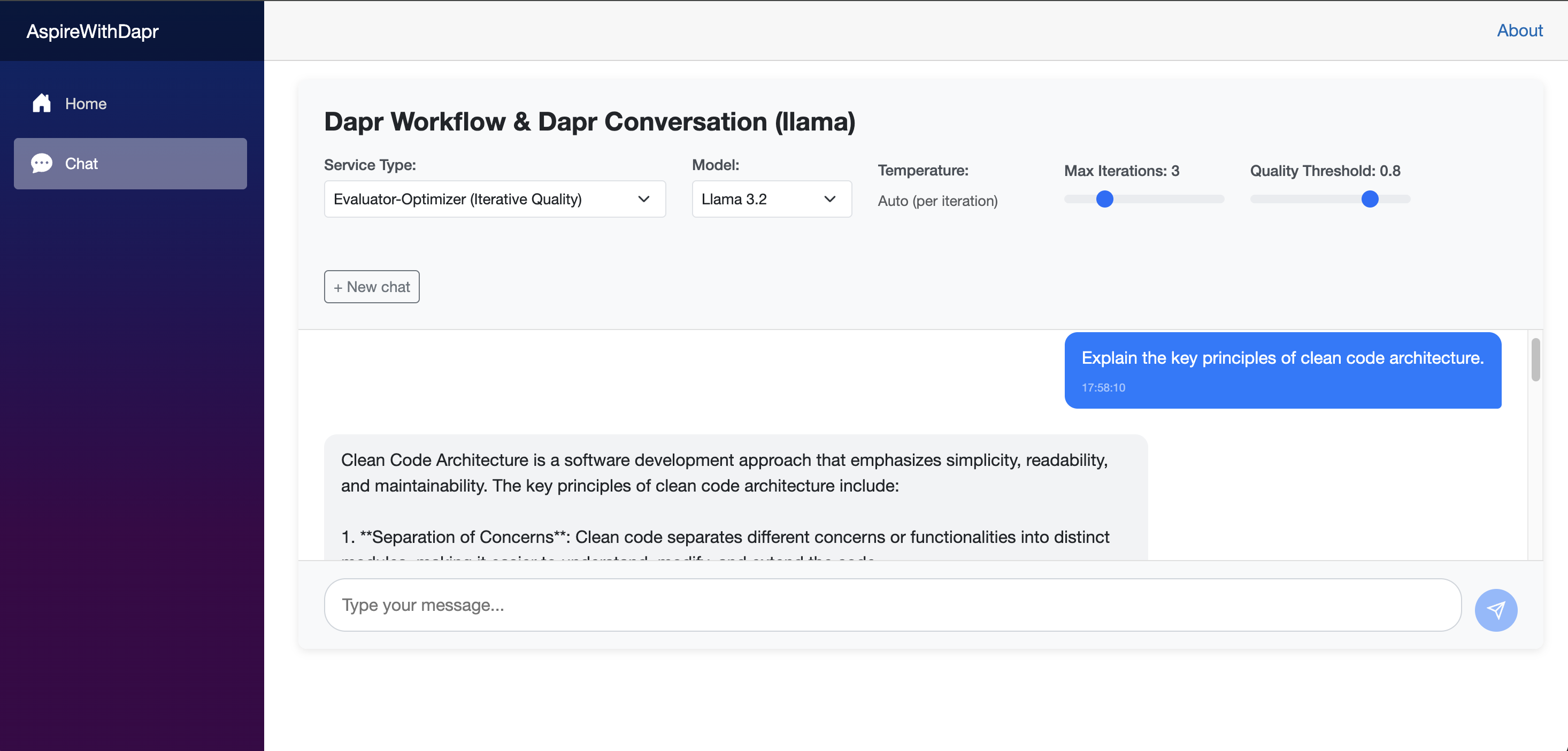

Run the web application from the Aspire Dashboard. Once it launches, select Orchestrator Workers (Task Breakdown) as the service type. You can then start a conversation and observe the application’s behavior. Using the Diagrid Dashboard, you can inspect the underlying workflow state and review historical execution data.

Now, let’s start structuring a new Minimal API for the Evaluator-Optimizer pattern.

Evaluator-Optimizer

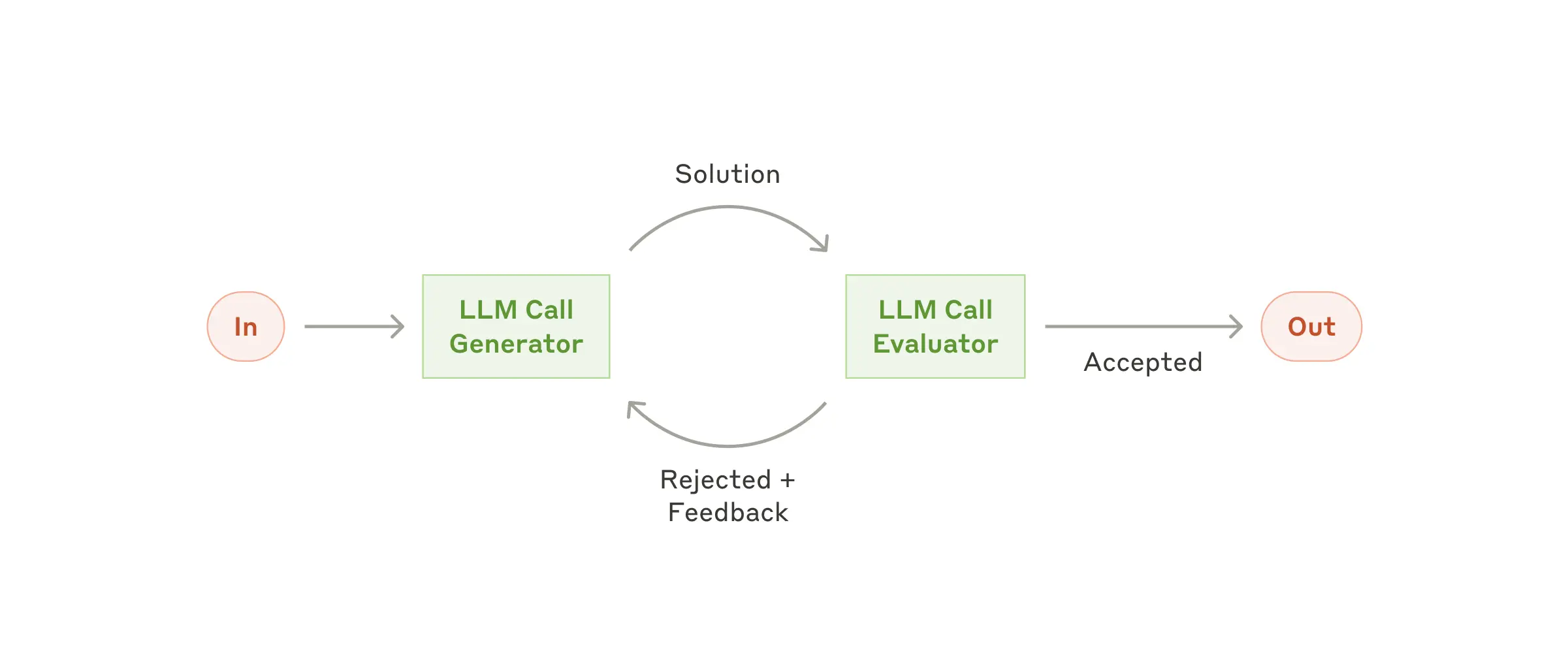

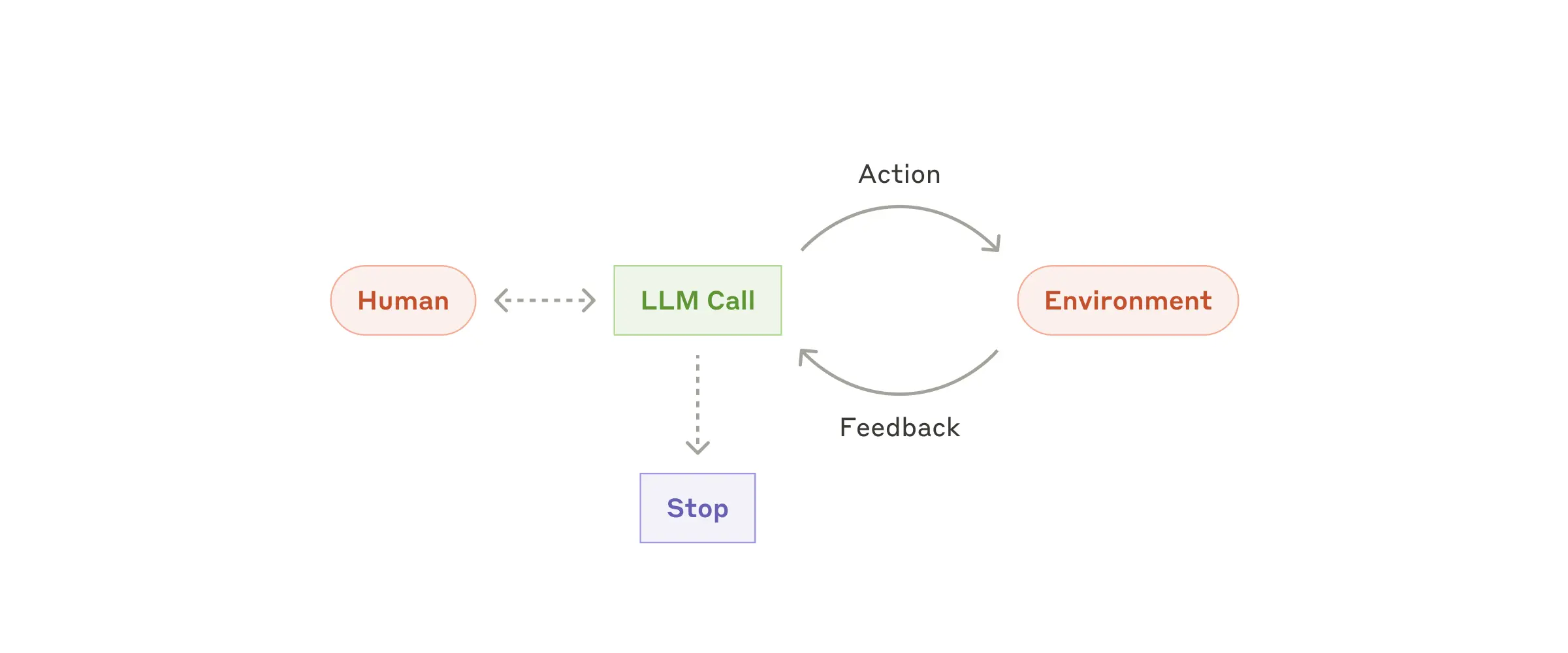

The evaluator–optimizer workflow pairs a response-generating LLM with an evaluating LLM that provides feedback in a loop to drive iterative improvement.
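As a rough illustration of that loop, here is a minimal sketch that leaves Dapr out entirely. The names `SketchEvaluation` and `EvaluatorOptimizerSketch` are hypothetical, and the generator, evaluator, and optimizer are passed in as plain delegates, on the assumption that each would wrap one LLM call in a real pipeline.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical, simplified stand-in for an evaluation result.
public record SketchEvaluation(double Score, string Feedback);

public static class EvaluatorOptimizerSketch
{
    public static async Task<(string Response, double Score, int Iterations)> RunAsync(
        string prompt,
        Func<string, Task<string>> generate,
        Func<string, string, Task<SketchEvaluation>> evaluate,
        Func<string, SketchEvaluation, Task<string>> optimize,
        int maxIterations = 3,
        double qualityThreshold = 0.8)
    {
        string? best = null;
        var bestScore = 0.0;
        var currentPrompt = prompt;

        for (var i = 1; i <= maxIterations; i++)
        {
            // Generate a candidate from the current (possibly rewritten) prompt.
            var response = await generate(currentPrompt);

            // Score against the original prompt, not the rewritten one.
            var evaluation = await evaluate(prompt, response);

            // Keep the first candidate unconditionally, then only improvements.
            if (best is null || evaluation.Score > bestScore)
            {
                best = response;
                bestScore = evaluation.Score;
            }

            // Stop early once the quality threshold is met.
            if (evaluation.Score >= qualityThreshold)
                return (best, bestScore, i);

            // Rewrite the prompt only if another iteration will follow.
            if (i < maxIterations)
                currentPrompt = await optimize(currentPrompt, evaluation);
        }

        return (best ?? string.Empty, bestScore, maxIterations);
    }
}
```

The loop ends on whichever comes first: a score at or above the threshold, or the iteration limit. In the second case it returns the best candidate seen so far.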

dotnet add package Dapr.AI

dotnet add package Dapr.Workflow

// LlmModels.cs

using System.ComponentModel.DataAnnotations;

namespace AspireWithDapr.EvaluatorOptimizer.Models;

public record LlmRequest(

[Required] [MinLength(1)] string Prompt,

Dictionary<string, object>? Context = null,

string? Model = null,

double? Temperature = null);

public record LlmResponse(

string Response,

Dictionary<string, object>? Metadata = null);

public record EvaluatorRequest(

[Required] [MinLength(1)] string Prompt,

int MaxIterations = 3,

double QualityThreshold = 0.8,

Dictionary<string, object>? Context = null,

string? Model = null,

double? Temperature = null);

public record EvaluationResult(

double Score,

List<string> Improvements,

string Feedback);

// Program.cs

#pragma warning disable DAPR_CONVERSATION

using System.ComponentModel.DataAnnotations;

using Dapr.AI.Conversation.Extensions;

using Dapr.Workflow;

using AspireWithDapr.EvaluatorOptimizer.Models;

using AspireWithDapr.EvaluatorOptimizer.Workflow;

var builder = WebApplication.CreateBuilder(args);

builder.AddServiceDefaults();

builder.Services.AddProblemDetails();

builder.Services.AddOpenApi();

builder.Services.AddDaprConversationClient();

builder.Services.AddDaprWorkflow(options =>

{

options.RegisterWorkflow<EvaluatorOptimizerWorkflow>();

options.RegisterActivity<CallLlmActivity>();

options.RegisterActivity<EvaluateActivity>();

options.RegisterActivity<OptimizePromptActivity>();

options.RegisterActivity<LoggingActivity>();

});

var app = builder.Build();

// Initialize workflow logger with the app's logger factory

var loggerFactory = app.Services.GetRequiredService<ILoggerFactory>();

EvaluatorOptimizerWorkflow.SetLogger(loggerFactory);

app.UseExceptionHandler();

if (app.Environment.IsDevelopment())

{

app.MapOpenApi();

}

app.MapGet("/", () => Results.Ok(new {

service = "Evaluator-Optimizer LLM API",

version = "1.0.0",

endpoints = new[] { "/llm/query", "/llm/query/{instanceId}", "/llm/query/{instanceId}/status", "/health" },

description = "Iteratively generates, evaluates, and optimizes responses until quality threshold is met"

}))

.WithName("GetServiceInfo")

.WithTags("Info");

app.MapPost("/llm/query", async (

[Required] EvaluatorRequest request,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

if (string.IsNullOrWhiteSpace(request.Prompt))

{

return Results.BadRequest(new { error = "Prompt is required and cannot be empty." });

}

if (request.MaxIterations < 1 || request.MaxIterations > 10)

{

return Results.BadRequest(new { error = "MaxIterations must be between 1 and 10." });

}

if (request.QualityThreshold < 0.0 || request.QualityThreshold > 1.0)

{

return Results.BadRequest(new { error = "QualityThreshold must be between 0.0 and 1.0." });

}

try

{

logger.LogInformation("Starting Evaluator-Optimizer workflow for prompt: {Prompt} with length {PromptLength} characters | Max Iterations: {MaxIterations} | Quality Threshold: {QualityThreshold}",

request.Prompt, request.Prompt.Length, request.MaxIterations, request.QualityThreshold);

var instanceId = Guid.NewGuid().ToString();

await workflowClient.ScheduleNewWorkflowAsync(

nameof(EvaluatorOptimizerWorkflow),

instanceId,

request);

logger.LogInformation("Workflow started with instance ID: {InstanceId}", instanceId);

return Results.Accepted($"/llm/query/{instanceId}", new {

instanceId,

status = "started"

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error starting workflow");

return Results.Problem(

detail: "An error occurred while starting the workflow.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("StartEvaluatorOptimizerQuery")

.WithTags("LLM")

// The 202 body is { instanceId, status }, not an LlmResponse

.Produces(StatusCodes.Status202Accepted)

.Produces(StatusCodes.Status400BadRequest)

.Produces(StatusCodes.Status500InternalServerError);

app.MapGet("/llm/query/{instanceId}", async (

string instanceId,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

try

{

var workflowState = await workflowClient.GetWorkflowStateAsync(instanceId);

if (workflowState is null)

{

return Results.NotFound(new { error = $"Workflow instance {instanceId} not found." });

}

if (workflowState.RuntimeStatus != WorkflowRuntimeStatus.Completed)

{

return Results.Accepted($"/llm/query/{instanceId}", new

{

instanceId,

runtimeStatus = workflowState.RuntimeStatus.ToString()

});

}

var output = workflowState.ReadOutputAs<LlmResponse>();

return Results.Ok(new

{

instanceId,

runtimeStatus = workflowState.RuntimeStatus.ToString(),

result = output

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error retrieving workflow state for instance {InstanceId}", instanceId);

return Results.Problem(

detail: "An error occurred while retrieving the workflow state.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("GetEvaluatorOptimizerQueryResult")

.WithTags("LLM")

.Produces<LlmResponse>()

.Produces(StatusCodes.Status404NotFound)

.Produces(StatusCodes.Status500InternalServerError);

app.MapGet("/llm/query/{instanceId}/status", async (

string instanceId,

DaprWorkflowClient workflowClient,

ILogger<Program> logger,

CancellationToken cancellationToken) =>

{

try

{

var workflowState = await workflowClient.GetWorkflowStateAsync(instanceId);

if (workflowState is null)

{

return Results.NotFound(new { error = $"Workflow instance {instanceId} not found." });

}

var status = workflowState.RuntimeStatus;

return Results.Ok(new

{

instanceId,

runtimeStatus = status.ToString(),

isCompleted = status == WorkflowRuntimeStatus.Completed,

isRunning = status == WorkflowRuntimeStatus.Running,

isFailed = status == WorkflowRuntimeStatus.Failed,

isTerminated = status == WorkflowRuntimeStatus.Terminated,

isPending = status == WorkflowRuntimeStatus.Pending

});

}

catch (Exception ex)

{

logger.LogError(ex, "Error retrieving workflow status for instance {InstanceId}", instanceId);

return Results.Problem(

detail: "An error occurred while retrieving the workflow status.",

statusCode: StatusCodes.Status500InternalServerError);

}

})

.WithName("GetEvaluatorOptimizerWorkflowStatus")

.WithTags("LLM")

.Produces(StatusCodes.Status200OK)

.Produces(StatusCodes.Status404NotFound)

.Produces(StatusCodes.Status500InternalServerError);

app.MapDefaultEndpoints();

app.Run();

// EvaluatorOptimizerWorkflow.cs

#pragma warning disable DAPR_CONVERSATION

using Dapr.Workflow;

using Dapr.AI.Conversation;

using Dapr.AI.Conversation.ConversationRoles;

using AspireWithDapr.EvaluatorOptimizer.Models;

using System.Text.Json;

namespace AspireWithDapr.EvaluatorOptimizer.Workflow;

public class EvaluatorOptimizerWorkflow : Workflow<EvaluatorRequest, LlmResponse>

{

private static ILogger? _logger;

public static void SetLogger(ILoggerFactory loggerFactory)

{

_logger = loggerFactory.CreateLogger<EvaluatorOptimizerWorkflow>();

}

public override async Task<LlmResponse> RunAsync(

WorkflowContext context,

EvaluatorRequest request)

{

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.Prompt);

// Create logger if not set, using a default factory as fallback

var logger = _logger ?? LoggerFactory.Create(builder => builder.AddConsole()).CreateLogger<EvaluatorOptimizerWorkflow>();

var workflowInstanceId = context.InstanceId;

// Log workflow start using activity to ensure it's captured

var promptPreview = request.Prompt.Length > 100 ? request.Prompt[..100] + "..." : request.Prompt;

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Workflow Start] InstanceId: {workflowInstanceId} | Prompt: \"{promptPreview}\" | Prompt Length: {request.Prompt.Length} | Max Iterations: {request.MaxIterations} | Quality Threshold: {request.QualityThreshold} | Model: {request.Model ?? "llama"} | Temperature: {request.Temperature ?? 0.7}"));

// Log full initial prompt for end-to-end tracking

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Initial Prompt] InstanceId: {workflowInstanceId}\n---\n{request.Prompt}\n---"));

var iteration = 0;

LlmResponse? bestResponse = null;

var bestScore = 0.0;

var currentPrompt = request.Prompt;

while (iteration < request.MaxIterations)

{

context.SetCustomStatus(new {

step = $"iteration_{iteration + 1}",

current_prompt_length = currentPrompt.Length,

best_score = bestScore

});

// Step 1: Generate response

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Generate] InstanceId: {workflowInstanceId} | Prompt: \"{(currentPrompt.Length > 100 ? currentPrompt[..100] + "..." : currentPrompt)}\" | Prompt Length: {currentPrompt.Length}"));

LlmResponse? response = null;

try

{

response = await context.CallActivityAsync<LlmResponse>(

nameof(CallLlmActivity),

new LlmRequest(currentPrompt, request.Context, request.Model, request.Temperature));

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Generate Complete] InstanceId: {workflowInstanceId} | Response Length: {response?.Response?.Length ?? 0}"));

// Log full response after generation

if (response?.Response != null)

{

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Response After Iteration {iteration + 1}] InstanceId: {workflowInstanceId}\n---\n{response.Response}\n---"));

}

}

catch (Exception ex)

{

logger.LogError(ex,

"[Iteration {Iteration}: Generate Failed] InstanceId: {InstanceId} | Error: {Error}",

iteration + 1,

workflowInstanceId,

ex.Message);

throw;

}

if (response == null)

{

logger.LogWarning(

"[Iteration {Iteration}: Generate Failed] InstanceId: {InstanceId} | Reason: Null response",

iteration + 1,

workflowInstanceId);

iteration++;

continue;

}

context.SetCustomStatus(new { step = $"evaluating_iteration_{iteration + 1}" });

// Step 2: Evaluate response

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Evaluate] InstanceId: {workflowInstanceId} | Response Length: {response.Response?.Length ?? 0}"));

EvaluationResult? evaluation = null;

try

{

evaluation = await context.CallActivityAsync<EvaluationResult>(

nameof(EvaluateActivity),

new EvaluateRequest(request.Prompt, response.Response ?? string.Empty, request.Model, request.Temperature));

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Evaluate Complete] InstanceId: {workflowInstanceId} | Score: {evaluation?.Score ?? 0.0} | Improvements Count: {evaluation?.Improvements?.Count ?? 0}"));

}

catch (Exception ex)

{

logger.LogError(ex,

"[Iteration {Iteration}: Evaluate Failed] InstanceId: {InstanceId} | Error: {Error}",

iteration + 1,

workflowInstanceId,

ex.Message);

throw;

}

if (evaluation == null)

{

logger.LogWarning(

"[Iteration {Iteration}: Evaluate Failed] InstanceId: {InstanceId} | Reason: Null evaluation",

iteration + 1,

workflowInstanceId);

iteration++;

continue;

}

context.SetCustomStatus(new {

step = $"iteration_{iteration + 1}_score",

score = evaluation.Score,

best_score = bestScore

});

// Track best response

// Always keep the first evaluated response, even when its score is 0.0

if (bestResponse is null || evaluation.Score > bestScore)

{

bestScore = evaluation.Score;

bestResponse = response;

logger.LogInformation(

"[Iteration {Iteration}: New Best] InstanceId: {InstanceId} | Score: {Score}",

iteration + 1,

workflowInstanceId,

evaluation.Score);

}

// Check if quality threshold met

if (evaluation.Score >= request.QualityThreshold)

{

logger.LogInformation(

"[Workflow Early Exit] InstanceId: {InstanceId} | Reason: Quality threshold met | Score: {Score} | Threshold: {Threshold} | Iterations: {Iterations}",

workflowInstanceId,

evaluation.Score,

request.QualityThreshold,

iteration + 1);

context.SetCustomStatus(new {

step = "completed",

iterations = iteration + 1,

final_score = evaluation.Score,

threshold_met = true

});

// Enhance metadata with iteration information

var enhancedMetadata = new Dictionary<string, object>(bestResponse?.Metadata ?? new Dictionary<string, object>())

{

["iterations"] = iteration + 1,

["final_score"] = evaluation.Score,

["quality_threshold"] = request.QualityThreshold,

["threshold_met"] = true,

["workflow_type"] = "evaluator_optimizer"

};

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Workflow Complete] InstanceId: {workflowInstanceId} | Total Iterations: {iteration + 1} | Final Score: {evaluation.Score} | Threshold Met: true | Final Response Length: {bestResponse?.Response?.Length ?? 0}"));

return bestResponse! with { Metadata = enhancedMetadata };

}

// Optimize prompt for next iteration

if (iteration < request.MaxIterations - 1)

{

context.SetCustomStatus(new { step = $"optimizing_iteration_{iteration + 1}" });

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Optimize] InstanceId: {workflowInstanceId} | Current Score: {evaluation.Score} | Improvements: {evaluation.Improvements?.Count ?? 0}"));

try

{

var optimizedPrompt = await context.CallActivityAsync<string>(

nameof(OptimizePromptActivity),

new OptimizePromptRequest(

request.Prompt,

currentPrompt,

response.Response ?? string.Empty,

evaluation,

request.Model,

request.Temperature));

currentPrompt = optimizedPrompt;

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Iteration {iteration + 1}: Optimize Complete] InstanceId: {workflowInstanceId} | Optimized Prompt Length: {currentPrompt.Length}"));

// Log full optimized prompt

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Optimized Prompt After Iteration {iteration + 1}] InstanceId: {workflowInstanceId}\n---\n{currentPrompt}\n---"));

}

catch (Exception ex)

{

logger.LogError(ex,

"[Iteration {Iteration}: Optimize Failed] InstanceId: {InstanceId} | Error: {Error}",

iteration + 1,

workflowInstanceId,

ex.Message);

// Continue with current prompt if optimization fails

}

}

iteration++;

}

logger.LogInformation(

"[Workflow Complete] InstanceId: {InstanceId} | Reason: Max iterations reached | Iterations: {Iterations} | Best Score: {BestScore} | Threshold: {Threshold}",

workflowInstanceId,

iteration,

bestScore,

request.QualityThreshold);

context.SetCustomStatus(new {

step = "completed_max_iterations",

final_score = bestScore,

iterations = iteration,

threshold_met = bestScore >= request.QualityThreshold

});

// Enhance metadata with iteration information

var finalMetadata = new Dictionary<string, object>(bestResponse?.Metadata ?? new Dictionary<string, object>())

{

["iterations"] = iteration,

["final_score"] = bestScore,

["quality_threshold"] = request.QualityThreshold,

["threshold_met"] = bestScore >= request.QualityThreshold,

["workflow_type"] = "evaluator_optimizer"

};

await context.CallActivityAsync<bool>(

nameof(LoggingActivity),

new LogMessage($"[Workflow Complete] InstanceId: {workflowInstanceId} | Total Iterations: {iteration} | Best Score: {bestScore} | Threshold Met: {bestScore >= request.QualityThreshold} | Final Response Length: {bestResponse?.Response?.Length ?? 0}"));

if (bestResponse == null)

{

logger.LogError(

"[Workflow Error] InstanceId: {InstanceId} | No valid response generated after all iterations",

workflowInstanceId);

return new LlmResponse(

"Failed to generate satisfactory response after all iterations.",

finalMetadata);

}

if (bestResponse.Metadata != null)

{

logger.LogDebug(

"[Workflow Final Metadata] InstanceId: {InstanceId} | Metadata: {Metadata}",

workflowInstanceId,

JsonSerializer.Serialize(finalMetadata));

}

return bestResponse with { Metadata = finalMetadata };

}

}

// Logging activity to ensure workflow logs are captured

public class LoggingActivity(ILogger<LoggingActivity> logger) : WorkflowActivity<LogMessage, bool>

{

public override Task<bool> RunAsync(WorkflowActivityContext context, LogMessage message)

{

try

{

switch (message.Level.ToLowerInvariant())

{

case "error":

logger.LogError("[Workflow] {Message}", message.Message);

break;

case "warning":

logger.LogWarning("[Workflow] {Message}", message.Message);

break;

case "debug":

logger.LogDebug("[Workflow] {Message}", message.Message);

break;

default:

logger.LogInformation("[Workflow] {Message}", message.Message);

break;

}

return Task.FromResult(true);

}

catch (Exception ex)

{

logger.LogError(ex, "[LoggingActivity] Error logging message: {Message}", message.Message);

return Task.FromResult(false);

}

}

}

public record LogMessage(string Message, string Level = "Information");

// Activity to call LLM (reusing pattern from AugmentedLlm)

public class CallLlmActivity(

DaprConversationClient conversationClient,

ILogger<CallLlmActivity> logger) : WorkflowActivity<LlmRequest, LlmResponse>

{

public override async Task<LlmResponse> RunAsync(

WorkflowActivityContext context,

LlmRequest request)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.Prompt);

var activityName = nameof(CallLlmActivity);

var startTime = DateTime.UtcNow;

var componentName = request.Model ?? "llama";

var promptPreview = request.Prompt.Length > 150 ? request.Prompt[..150] + "..." : request.Prompt;

logger.LogInformation(

"[{Activity} Start] Model: {Model} | Temperature: {Temperature} | Prompt: \"{Prompt}\" | Prompt Length: {PromptLength}",

activityName,

componentName,

request.Temperature ?? 0.7,

promptPreview,

request.Prompt.Length);

logger.LogDebug(

"[{Activity} Input] Full Request: {Request}",

activityName,

JsonSerializer.Serialize(new

{

request.Model,

request.Temperature,

PromptLength = request.Prompt.Length,

PromptPreview = request.Prompt.Length > 200 ? request.Prompt[..200] + "..." : request.Prompt,

ContextKeys = request.Context?.Keys.ToArray() ?? Array.Empty<string>()

}));

var conversationInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(request.Prompt)]

}

]);

var conversationOptions = new ConversationOptions(componentName)

{

Temperature = request.Temperature ?? 0.7

};

logger.LogDebug(

"[{Activity}] Calling LLM conversation API with component: {Component}",

activityName,

componentName);

var response = await conversationClient.ConverseAsync(

[conversationInput],

conversationOptions,

CancellationToken.None);

if (response?.Outputs is null || response.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs. Response: {Response}",

activityName,

response != null ? JsonSerializer.Serialize(response) : "null");

throw new InvalidOperationException("LLM returned no outputs.");

}

logger.LogDebug(

"[{Activity}] Received {OutputCount} output(s) from LLM",

activityName,

response.Outputs.Count);

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in response.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

response.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty response.");

}

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

var result = new LlmResponse(

responseText,

new Dictionary<string, object>

{

["model"] = componentName,

["temperature"] = request.Temperature ?? 0.7,

["timestamp"] = DateTime.UtcNow,

["conversationId"] = response.ConversationId ?? string.Empty

});

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Response Length: {ResponseLength} | Choices: {ChoiceCount} | ConversationId: {ConversationId}",

activityName,

duration,

responseText.Length,

choiceCount,

response.ConversationId ?? "none");

logger.LogDebug(

"[{Activity} Output] Response Preview: {Preview} | Metadata: {Metadata}",

activityName,

responseText.Length > 300 ? responseText[..300] + "..." : responseText,

JsonSerializer.Serialize(result.Metadata));

return result;

}

}

// Activity to evaluate response quality

public class EvaluateActivity(

DaprConversationClient conversationClient,

ILogger<EvaluateActivity> logger) : WorkflowActivity<EvaluateRequest, EvaluationResult>

{

public override async Task<EvaluationResult> RunAsync(

WorkflowActivityContext context,

EvaluateRequest request)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.OriginalPrompt);

ArgumentException.ThrowIfNullOrWhiteSpace(request.Response);

var activityName = nameof(EvaluateActivity);

var startTime = DateTime.UtcNow;

var componentName = request.Model ?? "llama";

var promptPreview = request.OriginalPrompt.Length > 100 ? request.OriginalPrompt[..100] + "..." : request.OriginalPrompt;

var responsePreview = request.Response.Length > 100 ? request.Response[..100] + "..." : request.Response;

logger.LogInformation(

"[{Activity} Start] Original Prompt: \"{Prompt}\" | Prompt Length: {PromptLength} | Response Length: {ResponseLength} | Model: {Model} | Temperature: {Temperature}",

activityName,

promptPreview,

request.OriginalPrompt.Length,

request.Response.Length,

componentName,

request.Temperature ?? 0.4);

logger.LogDebug(

"[{Activity} Input] Original Prompt: {Prompt} | Response Preview: {ResponsePreview}",

activityName,

request.OriginalPrompt,

request.Response.Length > 200 ? request.Response[..200] + "..." : request.Response);

var evaluationPrompt = $"""

Original prompt: {request.OriginalPrompt}

Generated response: {request.Response}

Evaluate this response (score 0.0 to 1.0) based on:

1. Relevance to original prompt

2. Completeness

3. Clarity

4. Accuracy

Provide:

1. Score (just the number)

2. List of improvements (one per line)

3. Brief feedback

Format:

SCORE: [score]

IMPROVEMENTS:

[improvement1]

[improvement2]

...

FEEDBACK: [feedback]

""";

var conversationInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(evaluationPrompt)]

}

]);

var conversationOptions = new ConversationOptions(componentName)

{

Temperature = request.Temperature ?? 0.4

};

logger.LogDebug(

"[{Activity}] Calling LLM conversation API with component: {Component}",

activityName,

componentName);

var response = await conversationClient.ConverseAsync(

[conversationInput],

conversationOptions,

CancellationToken.None);

if (response?.Outputs is null || response.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs. Response: {Response}",

activityName,

response != null ? JsonSerializer.Serialize(response) : "null");

throw new InvalidOperationException("LLM returned no outputs for evaluation.");

}

logger.LogDebug(

"[{Activity}] Received {OutputCount} output(s) from LLM",

activityName,

response.Outputs.Count);

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in response.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

response.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty response for evaluation.");

}

// Parse evaluation result

var lines = responseText.Split('\n', StringSplitOptions.RemoveEmptyEntries);

var score = 0.0;

var improvements = new List<string>();

var feedback = string.Empty;

var inImprovements = false;

foreach (var line in lines)

{

var trimmedLine = line.Trim();

if (trimmedLine.StartsWith("SCORE:", StringComparison.OrdinalIgnoreCase))

{

var scoreText = trimmedLine.Replace("SCORE:", "", StringComparison.OrdinalIgnoreCase).Trim();

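// Parse with the invariant culture so "0.85" is read the same way on every host locale

if (double.TryParse(scoreText, System.Globalization.NumberStyles.Float, System.Globalization.CultureInfo.InvariantCulture, out var parsedScore))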

{

score = Math.Clamp(parsedScore, 0.0, 1.0);

}

}

else if (trimmedLine.StartsWith("IMPROVEMENTS:", StringComparison.OrdinalIgnoreCase))

{

inImprovements = true;

}

else if (trimmedLine.StartsWith("FEEDBACK:", StringComparison.OrdinalIgnoreCase))

{

feedback = trimmedLine.Replace("FEEDBACK:", "", StringComparison.OrdinalIgnoreCase).Trim();

inImprovements = false;

}

else if (inImprovements && !string.IsNullOrWhiteSpace(trimmedLine))

{

improvements.Add(trimmedLine);

}

}

// If the FEEDBACK: label had no text on its own line, use everything that follows the label

if (string.IsNullOrWhiteSpace(feedback) && !inImprovements)

{

var feedbackStart = responseText.IndexOf("FEEDBACK:", StringComparison.OrdinalIgnoreCase);

if (feedbackStart >= 0)

{

feedback = responseText.Substring(feedbackStart + 9).Trim();

}

}

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

var result = new EvaluationResult(score, improvements, feedback);

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Score: {Score} | Improvements Count: {ImprovementsCount} | Choices: {ChoiceCount} | ConversationId: {ConversationId}",

activityName,

duration,

score,

improvements.Count,

choiceCount,

response.ConversationId ?? "none");

logger.LogDebug(

"[{Activity} Output] Score: {Score} | Improvements: {Improvements} | Feedback: {Feedback}",

activityName,

score,

JsonSerializer.Serialize(improvements),

feedback.Length > 200 ? feedback[..200] + "..." : feedback);

return result;

}

}

// Activity to optimize prompt for next iteration

public class OptimizePromptActivity(

DaprConversationClient conversationClient,

ILogger<OptimizePromptActivity> logger) : WorkflowActivity<OptimizePromptRequest, string>

{

public override async Task<string> RunAsync(

WorkflowActivityContext context,

OptimizePromptRequest request)

{

ArgumentNullException.ThrowIfNull(conversationClient);

ArgumentNullException.ThrowIfNull(request);

ArgumentException.ThrowIfNullOrWhiteSpace(request.OriginalPrompt);

ArgumentNullException.ThrowIfNull(request.Evaluation);

var activityName = nameof(OptimizePromptActivity);

var startTime = DateTime.UtcNow;

var componentName = request.Model ?? "llama";

var promptPreview = request.OriginalPrompt.Length > 100 ? request.OriginalPrompt[..100] + "..." : request.OriginalPrompt;

logger.LogInformation(

"[{Activity} Start] Original Prompt: \"{Prompt}\" | Current Prompt Length: {CurrentLength} | Response Length: {ResponseLength} | Score: {Score} | Improvements Count: {ImprovementsCount} | Model: {Model} | Temperature: {Temperature}",

activityName,

promptPreview,

request.CurrentPrompt.Length,

request.Response.Length,

request.Evaluation.Score,

request.Evaluation.Improvements?.Count ?? 0,

componentName,

request.Temperature ?? 0.7);

logger.LogDebug(

"[{Activity} Input] Original Prompt: {OriginalPrompt} | Current Prompt: {CurrentPrompt} | Response: {Response} | Evaluation: {Evaluation}",

activityName,

request.OriginalPrompt,

request.CurrentPrompt,

request.Response.Length > 200 ? request.Response[..200] + "..." : request.Response,

JsonSerializer.Serialize(new

{

request.Evaluation.Score,

request.Evaluation.Improvements,

FeedbackLength = request.Evaluation.Feedback?.Length ?? 0

}));

var improvementsText = request.Evaluation.Improvements != null && request.Evaluation.Improvements.Count > 0

? string.Join("\n", request.Evaluation.Improvements.Select((imp, i) => $"{i + 1}. {imp}"))

: "None specified";

var optimizationPrompt = $"""

Original goal: {request.OriginalPrompt}

Current prompt: {request.CurrentPrompt}

Generated response: {request.Response}

Evaluation score: {request.Evaluation.Score}

Improvements needed: {improvementsText}

Feedback: {request.Evaluation.Feedback}

Optimize the prompt to address the evaluation feedback.

Create a new, improved prompt that will likely generate a better response.

Focus on clarity, specificity, and addressing the noted improvements.

Optimized prompt:

""";

var conversationInput = new ConversationInput(

[

new UserMessage

{

Content = [new MessageContent(optimizationPrompt)]

}

]);

var conversationOptions = new ConversationOptions(componentName)

{

Temperature = request.Temperature ?? 0.7

};

logger.LogDebug(

"[{Activity}] Calling LLM conversation API with component: {Component}",

activityName,

componentName);

var response = await conversationClient.ConverseAsync(

[conversationInput],

conversationOptions,

CancellationToken.None);

if (response?.Outputs is null || response.Outputs.Count == 0)

{

logger.LogError(

"[{Activity} Error] LLM returned no outputs. Response: {Response}",

activityName,

response != null ? JsonSerializer.Serialize(response) : "null");

throw new InvalidOperationException("LLM returned no outputs for prompt optimization.");

}

logger.LogDebug(

"[{Activity}] Received {OutputCount} output(s) from LLM",

activityName,

response.Outputs.Count);

var responseText = string.Empty;

var choiceCount = 0;

foreach (var output in response.Outputs)

{

if (output?.Choices is null) continue;

foreach (var choice in output.Choices)

{

var content = choice.Message?.Content ?? string.Empty;

responseText += content;

choiceCount++;

}

}

if (string.IsNullOrWhiteSpace(responseText))

{

logger.LogError(

"[{Activity} Error] LLM returned empty response. Outputs Count: {OutputCount}, Choices Processed: {ChoiceCount}",

activityName,

response.Outputs.Count,

choiceCount);

throw new InvalidOperationException("LLM returned empty response for prompt optimization.");

}

var optimizedPrompt = responseText.Trim();

var duration = (DateTime.UtcNow - startTime).TotalMilliseconds;

var optimizedPreview = optimizedPrompt.Length > 150 ? optimizedPrompt[..150] + "..." : optimizedPrompt;

logger.LogInformation(

"[{Activity} Complete] Duration: {Duration}ms | Optimized Prompt: \"{OptimizedPrompt}\" | Optimized Prompt Length: {OptimizedLength} | Choices: {ChoiceCount} | ConversationId: {ConversationId}",

activityName,

duration,

optimizedPreview,

optimizedPrompt.Length,

choiceCount,

response.ConversationId ?? "none");

logger.LogDebug(

"[{Activity} Output] Optimized Prompt Preview: {Preview}",

activityName,

optimizedPrompt.Length > 300 ? optimizedPrompt[..300] + "..." : optimizedPrompt);

return optimizedPrompt;

}

}

// Request/Response models for activities

public record EvaluateRequest(

string OriginalPrompt,

string Response,

string? Model = null,

double? Temperature = null);

public record OptimizePromptRequest(

string OriginalPrompt,

string CurrentPrompt,

string Response,

EvaluationResult Evaluation,

string? Model = null,

double? Temperature = null);

If you walk through the code, you will see that it implements a Dapr Workflow based on the Evaluator-Optimizer pattern: an iterative refinement loop that generates an LLM response, evaluates its quality, and optimizes the prompt until a quality threshold is met or the maximum number of iterations is reached.
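To make the control flow easier to see, here is a minimal sketch of that refinement loop in plain C#, without any Dapr types. The three delegates stand in for the workflow's activities. The names `EvaluatorOptimizerLoop`, `SketchEvaluation`, and `RefinementOutcome`, along with the default threshold of 0.8 and the limit of 3 iterations, are assumptions made for illustration and are not taken from the sample project.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical types for this sketch; SketchEvaluation mirrors the shape of EvaluationResult.
public record SketchEvaluation(double Score, IReadOnlyList<string> Improvements, string Feedback);

public record RefinementOutcome(string Response, double Score, int Iterations);

public static class EvaluatorOptimizerLoop
{
    public static async Task<RefinementOutcome> RunAsync(
        string originalPrompt,
        Func<string, Task<string>> callLlm,
        Func<string, string, Task<SketchEvaluation>> evaluate,
        Func<string, string, string, SketchEvaluation, Task<string>> optimizePrompt,
        double qualityThreshold = 0.8, // assumed default
        int maxIterations = 3)         // assumed default
    {
        if (maxIterations < 1) throw new ArgumentOutOfRangeException(nameof(maxIterations));

        var currentPrompt = originalPrompt;
        var bestResponse = string.Empty;
        var bestScore = double.MinValue;

        for (var iteration = 1; iteration <= maxIterations; iteration++)
        {
            // Step 1: generate a response for the current prompt.
            var response = await callLlm(currentPrompt);

            // Step 2: score the response against the original goal.
            var evaluation = await evaluate(originalPrompt, response);

            // Remember the best response seen so far, in case a later iteration scores lower.
            if (evaluation.Score > bestScore)
            {
                bestScore = evaluation.Score;
                bestResponse = response;
            }

            // Stop when the score is good enough or the iteration budget is used up.
            if (evaluation.Score >= qualityThreshold || iteration == maxIterations)
                return new RefinementOutcome(bestResponse, bestScore, iteration);

            // Step 3: rewrite the prompt using the evaluation feedback.
            currentPrompt = await optimizePrompt(originalPrompt, currentPrompt, response, evaluation);
        }

        // Not reachable: the loop always returns on its last iteration.
        throw new InvalidOperationException("Refinement loop ended without a result.");
    }
}
```

In a Dapr Workflow, each delegate call would typically be a `CallActivityAsync` invocation, so every step is checkpointed and the loop can resume after a restart. Keeping the best-scoring response is a design choice of this sketch; the sample may simply return the last one.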

The workflow consists of three main steps (activities):

- Call LLM – Sends prompts to the LLM via DaprConversationClient and returns the generated response with metadata (model, temperature, timestamp, conversationId).

- Evaluate – Asks the LLM to score (0.0-1.0) a response based on relevance, completeness, clarity, and accuracy. Returns score, improvement suggestions, and feedback.

- Optimize Prompt – Takes the original prompt, current response, and evaluation feedback to generate an improved prompt for the next iteration.
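The Evaluate step depends on turning the LLM's labeled reply into a score, a list of improvements, and feedback. The sketch below isolates that parsing as a pure function so it can be unit tested without calling an LLM. The `IMPROVEMENTS:` and `FEEDBACK:` labels come from the activity code above; the `SCORE:` label and the `EvaluationParser` and `ParsedEvaluation` names are assumptions for illustration. Unlike the activity, this version collects feedback that spans several lines.

```csharp
using System;
using System.Collections.Generic;
using System.Globalization;

// Hypothetical result type for this sketch.
public record ParsedEvaluation(double Score, List<string> Improvements, string Feedback);

public static class EvaluationParser
{
    public static ParsedEvaluation Parse(string responseText)
    {
        var score = 0.0;
        var improvements = new List<string>();
        var feedbackLines = new List<string>();
        var section = string.Empty; // which labeled section the current line belongs to

        foreach (var rawLine in responseText.Split('\n'))
        {
            var line = rawLine.Trim();

            if (TryStripLabel(line, "SCORE:", out var rest)) // "SCORE:" is an assumed label
            {
                // Parse with the invariant culture so "0.75" works regardless of OS locale,
                // then clamp to the 0.0-1.0 range the evaluator is asked to use.
                if (double.TryParse(rest, NumberStyles.Float, CultureInfo.InvariantCulture, out var parsed))
                    score = Math.Clamp(parsed, 0.0, 1.0);
                section = string.Empty;
            }
            else if (TryStripLabel(line, "IMPROVEMENTS:", out rest))
            {
                section = "improvements";
                if (rest.Length > 0) improvements.Add(rest);
            }
            else if (TryStripLabel(line, "FEEDBACK:", out rest))
            {
                section = "feedback";
                if (rest.Length > 0) feedbackLines.Add(rest);
            }
            else if (line.Length > 0)
            {
                // Unlabeled lines belong to whichever section was opened last.
                if (section == "improvements") improvements.Add(line);
                else if (section == "feedback") feedbackLines.Add(line);
            }
        }

        return new ParsedEvaluation(score, improvements, string.Join(" ", feedbackLines));
    }

    // Returns true and the text after the label when the line starts with it (case-insensitive).
    private static bool TryStripLabel(string line, string label, out string rest)
    {
        if (line.StartsWith(label, StringComparison.OrdinalIgnoreCase))
        {
            rest = line[label.Length..].Trim();
            return true;
        }

        rest = string.Empty;
        return false;
    }
}
```

Because LLM output does not always follow the requested format, a parser like this falls back to a score of 0.0 and empty collections rather than throwing, which lets the workflow treat a malformed evaluation as "needs another iteration".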

NOTE: Before running the application, ensure that the EvaluatorOptimizer project is added to the AppHost and that the web application’s reference is updated accordingly. Refer to the code snippet below.

// AppHost.cs

// Pattern - 6. EvaluatorOptimizer

var evaluatorOptimizerService = builder.AddProject<Projects.AspireWithDapr_EvaluatorOptimizer>("EvaluatorOptimizer")

.WithExternalHttpEndpoints()

.WithHttpHealthCheck("/health")

.WithDaprSidecar(new DaprSidecarOptions

{

ResourcesPaths = ["components"],

Config = "components/daprConfig.yaml"

})

.WithEnvironment("DAPR_HOST_IP", "127.0.0.1")